To deliver aid to alleviate poverty most effectively, you have to know where the people most in need are. In many countries, this is commonly done with household surveys. But these are often infrequent and cover limited locations.

Recent advances in artificial intelligence (AI) have created a step change in how poverty and other human development indicators can be measured. Our team has used a type of AI known as a deep convolutional neural network (DCNN) to study satellite imagery and identify some types of poverty with a level of accuracy close to that of household surveys.

The use of this AI technology could help, for instance, in developing countries where there has been a rapid change in land use. The AI could monitor via satellite and potentially spot areas that are in need of aid. This would be much quicker than relying on ground surveys.

Plus, the dreamy images our deep learning model has produced give us a novel insight into how AI visualises the world.

Authors/Google, CC BY

A DCNN is a type of advanced AI algorithm commonly used in processing and analysing visual imagery. The “deep” in its name refers to the multiple layers through which data is processed, making it part of the broader family of deep learning technologies.

Earlier this year our team made an important discovery using the DCNN. This network was initially trained on the vast array of labelled images from the ImageNet repository: a huge pictorial dataset of objects and living things used to train algorithms. After this initial phase, in which the network learned to recognise various objects, we fine-tuned it using daytime satellite images of populated places.
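The two-stage idea (general pretraining, then fine-tuning on a narrower task) can be illustrated with a deliberately tiny sketch. It is not our DCNN or our training pipeline: `frozen_features` is a hypothetical stand-in for layers learned during pretraining, the data is synthetic rather than real satellite imagery, and the sketch assumes one common fine-tuning variant in which only a new final layer (the "head") is trained.

```python
import math
import random


def frozen_features(image):
    """Hypothetical stand-in for pretrained layers: fixed, never updated below."""
    mean_brightness = sum(image) / len(image)
    bright_fraction = sum(1 for p in image if p > 0.5) / len(image)
    return [mean_brightness, bright_fraction]


def predict(head, features):
    """Logistic output of the new head, a value between 0 and 1."""
    z = head["bias"] + sum(w * f for w, f in zip(head["weights"], features))
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune(images, labels, epochs=500, lr=1.0):
    """Train only the new head with stochastic gradient descent on log loss."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    features = [frozen_features(img) for img in images]
    for _ in range(epochs):
        for f, y in zip(features, labels):
            err = predict(head, f) - y  # gradient of log loss w.r.t. z
            head["weights"] = [w - lr * err * x for w, x in zip(head["weights"], f)]
            head["bias"] -= lr * err
    return head


# Synthetic toy data (an assumption for illustration, not real imagery):
# 16-pixel "images" drawn from two arbitrary brightness ranges.
rng = random.Random(0)


def toy_image(low, high, size=16):
    return [rng.uniform(low, high) for _ in range(size)]


images = [toy_image(0.6, 1.0) for _ in range(20)] + [toy_image(0.0, 0.4) for _ in range(20)]
labels = [1] * 20 + [0] * 20

head = fine_tune(images, labels)
correct = sum(
    (predict(head, frozen_features(img)) > 0.5) == bool(y)
    for img, y in zip(images, labels)
)
accuracy = correct / len(images)
```

In a real system the frozen part would be a deep network with millions of learned parameters, and fine-tuning may update some or all of those layers as well.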

Our findings revealed that the DCNN, enhanced by this specialised training, could surpass human performance in accurately assessing poverty levels from satellite imagery. Specifically, the AI system demonstrated a capability to deduce poverty levels from low-resolution daytime satellite images with greater precision than humans analysing high-resolution images.

Such proficiency echoes the superhuman achievements of AI in other realms, such as the chess and Go engines that consistently outwit human players.

After the training phase was complete, we set out to understand what characteristics the DCNN was identifying within the satellite images as being indicative of “high wealth”. This process began with what we called a “blank slate” – an image composed entirely of random noise, devoid of any discernible features.

Step by step, the model “adjusts” this noisy image. Each adjustment is a move towards what the model considers a satellite image of a wealthier place than the previous image. These modifications are driven by the model’s internal understanding and learning from its training data.

As the adjustments proceed, the initially random image progressively morphs into one that the model confidently classifies as indicating high wealth. This transformation was revelatory because it unveiled the particular features, patterns and elements that the model associates with wealth in satellite imagery.

Such features might include (but are not limited to) the density of roads, the layout of urban areas, or other subtle cues learned through the model’s training.
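The blank-slate procedure described above is a form of gradient ascent on the input image. The sketch below is a minimal illustration, not our actual DCNN: `wealth_score` is a hypothetical toy model (a single logistic unit with made-up random weights), and the 64-pixel "image" is just a list of values between 0 and 1. What it shows is the loop itself: start from noise, then repeatedly nudge every pixel in the direction that raises the model's score.

```python
import math
import random


def wealth_score(image, weights, bias):
    """Hypothetical toy stand-in for the model: a logistic score in (0, 1)."""
    z = bias + sum(w * p for w, p in zip(weights, image))
    return 1.0 / (1.0 + math.exp(-z))


def visualise_high_wealth(weights, bias, steps=200, lr=0.5, seed=0):
    """Start from random noise and adjust pixels to raise the model's score."""
    rng = random.Random(seed)
    image = [rng.random() for _ in weights]  # the "blank slate": pure noise
    history = [wealth_score(image, weights, bias)]
    for _ in range(steps):
        s = wealth_score(image, weights, bias)
        # For a logistic model, d(score)/d(pixel_i) = s * (1 - s) * w_i.
        # Step each pixel uphill, keeping values in the valid range [0, 1].
        image = [
            min(1.0, max(0.0, p + lr * s * (1.0 - s) * w))
            for p, w in zip(image, weights)
        ]
        history.append(wealth_score(image, weights, bias))
    return image, history


# Made-up weights for illustration only; a trained network would supply its own.
weight_rng = random.Random(42)
weights = [weight_rng.uniform(-1.0, 1.0) for _ in range(64)]

image, history = visualise_high_wealth(weights, bias=-2.0)
```

Here `history` records the score after each adjustment, so it traces how the noise image moves towards what this toy model rates as "high wealth". Passing a different `seed` gives a different starting image. With a deep network the gradient would come from backpropagation rather than a one-line formula.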

Authors/Google, CC BY

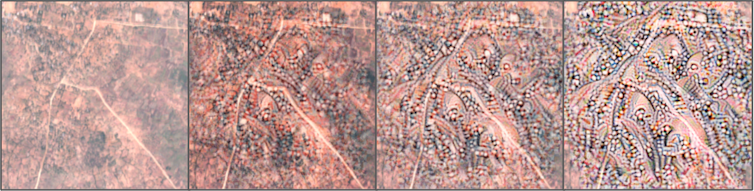

The sequence of images displayed above serves an important purpose in our research. It begins with a baseline satellite image of a village in Tanzania, which our AI model categorises as “poor”, probably because of the sparse presence of roads and buildings.

To test and confirm this hypothesis, we progressively modify each subsequent image in the sequence, methodically enhancing them with additional features such as buildings and roads. These augmentations represent increased wealth and development as perceived by the AI model.

This visual progression shows how the AI is visualising “wealth” as we add things like more roads and houses. The characteristics we deduced from the model’s “ideal” wealth image (such as roads and buildings) are indeed influential in the model’s assessment of wealth.

This step is important in ensuring that the features we believe to be significant in the AI’s decision-making process do, in fact, correspond to higher wealth predictions.

So by repeatedly adjusting the image, the resulting visualisation progressively evolves into what the network “thinks” wealth looks like. The result is often abstract or surreal.

Authors, CC BY

The image above was generated from a blank slate after we asked the DCNN what it associated with “high wealth”. These images have an ethereal quality and don’t closely resemble typical daytime satellite photos. Yet the presence of “blobs” and “lines” suggests clusters of homes interconnected by roads and streets. The blue hue might even hint at coastal areas.

Dreamy images

Inherent in this method is an element of randomness. This randomness ensures that each attempt at visualisation creates a unique image, though all are anchored in the same underlying concept as understood by the network.

However, it is important to note that these visualisations are more a reflection of the network’s “thought process” than an objective representation of wealth. They’re constrained by the network’s training and may not accurately align with human interpretations.

It is important to understand that while AI feature visualisation offers intriguing insights into neural networks, it also highlights the complexities and limitations of machine learning in mirroring human perception and understanding.

Understanding poverty, particularly in its geographical or regional context, is a complex endeavour. While traditional studies have focused more on individual aspects of poverty, AI, leveraging satellite imagery, has made significant strides in highlighting the geographical patterns of regional poverty.

This is where the true value of AI in poverty assessment lies: in offering a spatially nuanced perspective that enhances existing poverty research and aids in formulating more targeted and effective interventions.

This article was originally published at theconversation.com