Researchers published a study comparing the accuracy and quality of summaries produced by LLMs. Claude 3 Opus performed particularly well, but humans still came out on top.

AI models are extremely useful for summarizing long documents when you don’t have the time or inclination to read them.

The luxury of growing context windows means we can prompt models with longer documents, which tests their ability to consistently get the facts right in the summary.

The researchers, from the University of Massachusetts Amherst, Adobe, the Allen Institute for AI, and Princeton University, published a study that set out to learn how good AI models are at summarizing book-length content (>100,000 tokens).

FABLES

They selected 26 books published in 2023 and 2024 and had various LLMs summarize the texts. The recent publication dates were chosen to avoid potential data contamination in the models’ original training data.

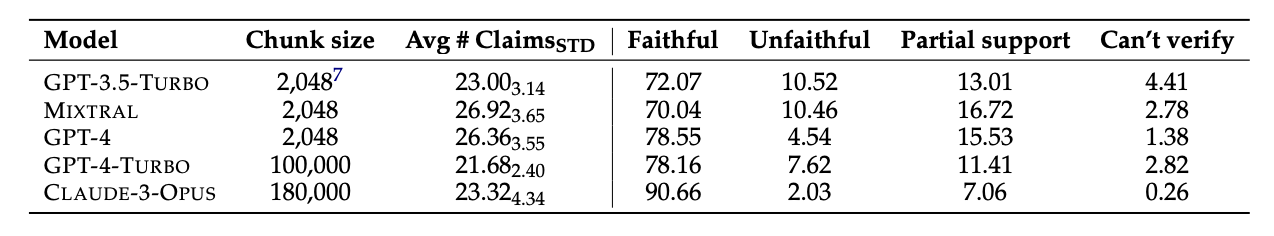

After the models produced the summaries, the researchers used GPT-4 to extract decontextualized claims from them. They then hired human annotators who had read the books and asked them to fact-check the claims.

The resulting data was compiled into a dataset called Faithfulness Annotations for Book-Length Summarization (FABLES). FABLES contains 3,158 claim-level annotations of faithfulness across 26 narrative texts.

The test results showed that Claude 3 Opus was by far the most faithful book-length summarizer, with over 90% of its claims verified as faithful, or accurate.

GPT-4 came in a distant second, with only 78% of its claims verified as faithful by human annotators.
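Percentages like these come down to the share of each model's claims that annotators marked as faithful. A minimal sketch of that calculation is below. The rows, model names, and label strings are made up for illustration and are not taken from FABLES, whose actual label scheme may differ.

```python
from collections import defaultdict

# Hypothetical claim-level annotations: (model name, human verdict).
# These rows are invented for illustration; they are not FABLES data.
annotations = [
    ("model_a", "faithful"),
    ("model_a", "faithful"),
    ("model_a", "unfaithful"),
    ("model_a", "faithful"),
    ("model_b", "faithful"),
    ("model_b", "unfaithful"),
    ("model_b", "unfaithful"),
    ("model_b", "faithful"),
]


def faithfulness_rates(rows):
    """Return {model: share of its claims labeled 'faithful'}."""
    totals = defaultdict(int)
    faithful = defaultdict(int)
    for model, verdict in rows:
        totals[model] += 1
        if verdict == "faithful":
            faithful[model] += 1
    return {model: faithful[model] / totals[model] for model in totals}


rates = faithfulness_rates(annotations)
for model, rate in sorted(rates.items()):
    print(f"{model}: {rate:.0%} of claims labeled faithful")
```

With real annotations, the same function would take one row per extracted claim, so a model with many short claims and a model with few long ones are both scored on a per-claim basis.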

The hard part

The models tested all seemed to struggle with the same problems. Most of the facts the models got wrong related to events or to the states of characters and relationships.

The paper notes that most of these claims can only be refuted through multi-hop reasoning over the evidence, which highlights the complexity of the task and how it differs from existing fact-checking settings.

LLMs also often omitted critical information from their summaries. They placed too much emphasis on content toward the end of the books and missed important content at the beginning.

Will AI replace human annotators?

Human annotators or fact-checkers are expensive. The researchers spent $5,200 on human annotators to verify the claims in the AI summaries.

Could an AI model have done this job for less money? Simple fact retrieval is something Claude 3 does well, but its performance when verifying claims that require a deeper understanding of the content is less consistent.

When presented with the extracted claims and asked to verify them, all of the AI models fell short of the human annotators. They performed particularly poorly at identifying unfaithful claims.
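One common way to put a number on "identifying unfaithful claims" is to treat the human verdicts as ground truth and compute precision and recall for the unfaithful class. The sketch below does that on invented labels. The function name, label strings, and data are assumptions for illustration, and the study may have used different metrics.

```python
def unfaithful_detection_scores(human, predicted):
    """Precision and recall for the 'unfaithful' class, with human labels as truth."""
    pairs = list(zip(human, predicted))
    # Claims both the humans and the model flagged as unfaithful.
    tp = sum(h == "unfaithful" and p == "unfaithful" for h, p in pairs)
    # Claims the model flagged that humans judged faithful.
    fp = sum(h == "faithful" and p == "unfaithful" for h, p in pairs)
    # Unfaithful claims the model let through as faithful.
    fn = sum(h == "unfaithful" and p == "faithful" for h, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Invented labels for six claims; not data from the study.
human_labels = ["faithful", "faithful", "unfaithful", "unfaithful", "faithful", "unfaithful"]
model_labels = ["faithful", "faithful", "faithful", "unfaithful", "faithful", "faithful"]

precision, recall = unfaithful_detection_scores(human_labels, model_labels)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this toy example the automated verifier agrees with the humans on four of six claims, yet it catches only one of the three unfaithful ones. That is why overall agreement alone can hide the weakness described above.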

Although Claude 3 Opus was by far the best claim verifier, the researchers concluded that it “ultimately performed too poorly to be a reliable automated evaluator.”

When it comes to understanding the nuances, complex human relationships, storylines, and character motivations in a long-form narrative, humans still appear to have the edge for now.

This article was originally published at dailyai.com