Just over a decade ago, artificial intelligence (AI) made one of its showier forays into the general public’s consciousness when IBM’s Watson computer appeared on the American quiz show Jeopardy! The studio audience was made up of IBM employees, and Watson’s exhibition performance against two of the show’s most successful contestants was televised to a national viewership across three evenings. In the end, the machine triumphed comfortably.

One of Watson’s opponents, Ken Jennings, who went on to make a career on the back of his gameshow prowess, showed grace – or was it deference? – in defeat, jotting down this commentary to accompany his final answer: “I, for one, welcome our new computer overlords.”

In fact, his phrase had been poached from another American television mainstay, The Simpsons. Jennings’ wry popular culture reference signalled Watson’s reception less as computer overlord and more as technological curio. But that was not how IBM saw it. On the back of this very public success, in 2011 IBM turned Watson toward one of the most lucrative but untapped industries for AI: healthcare.

What followed over the next decade was a series of ups and downs – but mostly downs – that exemplified the promise, but also the many shortcomings, of applying AI to healthcare. The Watson health odyssey finally ended in 2022 when it was sold off “for parts”.

There is much to learn from this story about why AI and healthcare seemed so well-suited, and why that potential has proved so difficult to realise. But first we need to revisit the controversial origins of data use in this field, long before electronic computers were invented, and meet one of its American pioneers, Ernest Amory Codman – an elite by birth, a surgeon by training, and a provocateur by nature.

Data’s role in the birth of modern medicine

While the utility of data in a general way had already been clear for several centuries, its collection and use on a large scale was a feature of the 19th century. By the 1850s, collecting census data had become commonplace. Its use was not merely descriptive; it formed a way to make determinations about how to govern.

The 19th century marked the first time that, as US systems expert Shawn Martin explains, “managers felt the need to tie the information that society collected to things like performance [and] productivity”. This applied to public health as well, where “big data” played a critical role in establishing relationships between populations, their habits and environment (both at home and work), and disease.


A well-known example is John Snow’s discovery of the source of a cholera outbreak in London’s Soho neighbourhood in 1854. Now considered one of epidemiology’s founding fathers, Snow canvassed door to door asking whether the families inside had had cholera. His analysis came chiefly in the reorganisation of the data he collected – its plotting on a map – such that a pattern might emerge. This ultimately established not only the extent of the outbreak but also its source, the Broad Street water pump.

For Boston-born Codman, an outspoken medical reformer working at the start of the 20th century, such use of data to understand disease was up there as “one of the best moments in medicine”.

Though Codman was involved in many data-driven reforms during his controversial career, one of the most successful was the Registry of Bone Sarcoma, which he established in 1920. His goal was to gather and analyse all the cases of bone cancer (or suspected bone cancer) from across the US, and to use these to establish diagnostic criteria, therapeutic effectiveness and a standardised nomenclature.

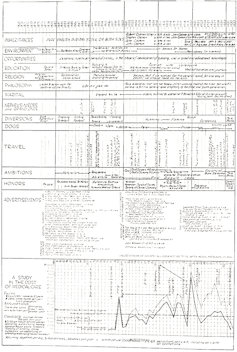

There were a few rules for this registry. Individual doctors who contributed had to send x-rays, case reports and, if possible, tissue samples for examination by the registry’s consulting pathologists and Codman himself. This would ensure both the accuracy and uniformity of pathological analysis. The effort was a success that grew over time: by 1954, when the American College of Surgeons sought a new home for the registry, it contained an impressive 2,400 complete, cross-referenced cases.


On the face of it, Codman’s decision to concentrate on bone cancer was baffling. It was neither a pressing nor a common concern for doctors across the US. But the disease’s relative rarity was one reason he selected it. Codman felt the amount of data received from his nationwide request would not be overwhelming for his small team of researchers to analyse.

Perhaps more importantly, he knew that studying bone cancer would raise the ire of far fewer of his colleagues than a more common disease might. In a clinical atmosphere in which expertise was understood as a combination of long experience and a touch of intuition – the physician’s “art” – Codman’s touting of data as a better way to obtain knowledge about a disease and its treatment was already being met with vociferous opposition.

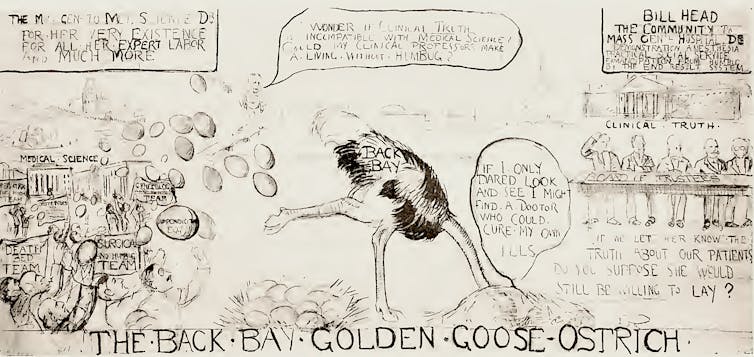

It didn’t help that he tended to be inflammatory and provocative in the pursuit of his data-driven goals. At a medical meeting in Boston in 1915, he launched a surprise attack on his fellow practitioners. In the middle of this staid affair, Codman unveiled an 8ft cartoon lampooning his colleagues for their apathy toward healthcare reform and, as he saw it, their wilful ignorance of the limitations of the profession. As one (former) friend put it in the event’s aftermath, Codman’s only hope was that people would take the “charitable” view and consider him not an enemy of the profession but merely “mentally deranged”.


Undeterred, Codman continued this pugnacious approach to his pioneering work. In a 1922 letter to the distinguished Boston Medical and Surgical Journal, he complained that the surgeons of Massachusetts had been particularly unhelpful to his registry. He explained that he had – politely – asked the 5,494 physicians in the state to “drop him a postal stating whether or not he knew of a case” so that Codman could acquire “the very best statistics ever obtained on the frequency of the disease”. To his chagrin, he had received only 19 responses in nearly two years. Needling the journal’s editors and readers at the same time, he asked:

Is this because your Journal is not read? … [Or] because of indifference of the medical profession as to whether the frequency of bone sarcoma is known or not?

Codman proposed a questionnaire that would allow the journal to see whether the problem was its lack of readership, or his colleagues’ “inertia, procrastination, disapproval, opposition or disinterest”. A subsequent editorial in response to Codman’s proposal was surprisingly magnanimous:

Whether we will it or not, we are obliged to be irritated, amused or instructed, according to our temperaments, by Dr Codman. Our advice is to be instructed.

An end to elitism?

Despite the establishment’s resistance, submissions to Codman’s registry began to grow such that by 1924, he had enough material to make preliminary comments about bone cancer. For one thing, he had succeeded in standardising the much-contested matter of the correct nomenclature for the disease. This, he exulted, was so significant that it should be likened to the “rising of the sun”.


The registry also offered up many pieces of “impersonal proof”, as Codman called his data-driven findings, of the rightness of certain theories that individual physicians had promoted. Claims, for instance, that combined treatments of “surgery, mixed toxins and radium” were more effective than treatments that relied on any one of these alone were borne out by the data.

The registry, as Codman’s colleague Joseph Colt Bloodgood put it, “excited great interest” among practitioners, and not simply because it had “influenced the entire medical world to pay more attention to bone tumours”. More importantly, it provided a new model for how to do medical work. Another admiring colleague responded to Bloodgood:

The work of the registry [is] one of the outstanding American contributions to surgical pathology. As a method of study, it shows the need of very wide experience before a surgeon is capable of handling intelligently cases of this disease … [It] is impossible for any single individual to assert finality of this kind.

This emphasis on “very wide experience” over the experience of “any single individual” points to another critical reason to prefer data, according to Codman. His goal in changing the method by which medical knowledge was made was not only to get better results. By seeking to undo the image of medicine as an “art” that relied on the wisdom of a select group of preternaturally talented individuals, Codman also threatened to undo the class-ridden reality that underlay this public veneer.

As the efficiency engineer Frank Gilbreth implied in a 1913 article in the American Magazine, if it was true that medicine required no specific intrinsic gifts (monetary or otherwise), then absolutely anybody – whatever their class, race or background – could do it, including “bricklayers, shovellers and dock-wallopers” who were currently shut out of such “high-brow” occupations.

Codman was even more pointed. If data was used to judge the outcomes of his physician colleagues, he insisted, it would show that the quality of doctors and hospitals was generally poor. He sniped that they excelled chiefly in “making dying men think they’re recovering, concealing the gravity of significant diseases, and exaggerating the importance of minor illnesses to suit the occasion”.


“Nepotism, pull and politics” were the order of the day in medicine, Codman wrote in one of his most scathing takedowns of his colleagues at the Massachusetts General Hospital. Yet he made himself the centrepiece of this critique, conceding that his entrance to Harvard Medical School had come on the back of “friends and relatives among the well-to-do”. The only difference, he suggested, was that he was willing to come clean about it, and to subject himself and his work to the scrutiny of data.

Data’s unflattering view of medicine

Codman was not the only person having a come-to-Jesus moment with data during this period. In the 1920s, the American social science researchers Robert and Helen Lynd collected data in the small US town of Muncie, Indiana, as a way of creating a picture of the “averaged American”.

In the 1930s, the similarly minded Mass Observation project took off in Britain, intending to collect data about everyday life in order to create an “anthropology of ourselves”. Crucially, both reflected the thinking that also drove Codman: that the right way to know something – a people, a disease – was to produce what seemed a suitably representative average. And this meant the amalgamation of often quite diverse and wide-ranging characteristics and their compression into a single, standard, efficient unit.

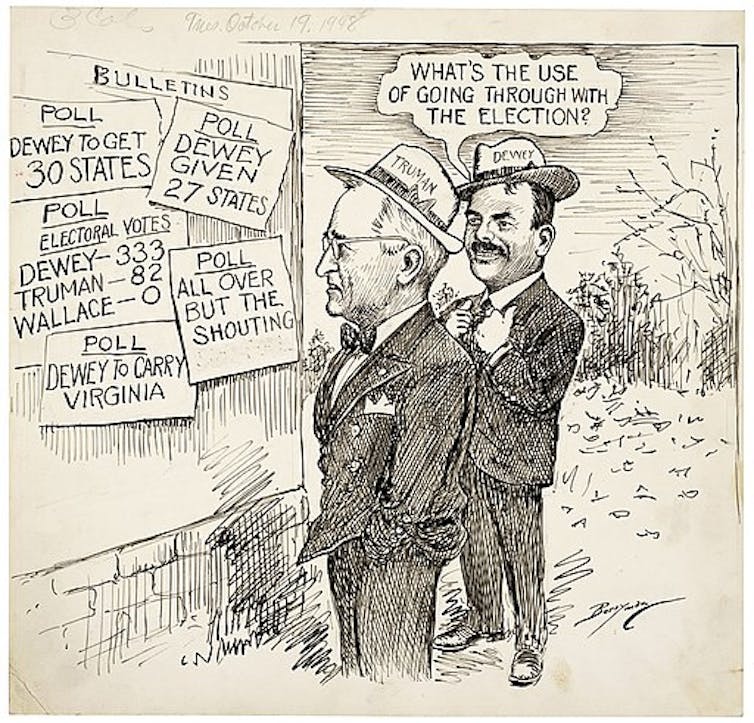

The turn from describing representative averages to learning from these averages is perhaps best articulated in the work of pollsters, whose door-to-door interrogations were aimed at helping a nation to know itself through statistics. In 1948, spurred by their failure to correctly predict the outcome of the US presidential election – one of the most famous psephological errors in the nation’s history – pollsters such as George Gallup and Elmo Roper began to rethink their analytic methods, moving away from quota sampling and towards random sampling.


At the same time, thanks primarily to its military applications, the science of computing began to gather pace. And the growing fascination with knowing the world via data, combined with the unparalleled ability of computers to crunch it, seemed a match made in heaven.

In a late-in-life preface to his 1934 data-driven magnum opus on the anatomy of the shoulder, Codman had comforted himself with the thought that he was a man ahead of his time. And indeed, just a few years after his death in 1940, statistical analysis began to pick up steam in medicine.

Over the next 20 years, figures such as Sir Ronald Fisher, the geneticist and statistician remembered for suggesting randomisation as an antidote to bias, and his English compatriot Sir Austin Bradford Hill, who demonstrated the connection between smoking and lung cancer, also pushed forward the integration of statistical analysis into medicine.


However, it would take many more years for word to finally leak out that, by data’s measure, both the methodologies of medical research and much of medicine itself were ineffective. In a movement led partly by outspoken Scottish epidemiologist Archie Cochrane, this unflattering statistical view of medicine finally saw the light of day in the 1960s and 70s.

Cochrane went so far as to say that medicine was based on “a level of guesswork” so great that any return to health after a medical intervention was more a “tribute to the sheer survival power of the minds and bodies” of patients than anything else. Aghast at the revelations embedded in Cochrane’s 1972 book, Random Reflections on Health Services, the Guardian journalist Ann Shearer wrote:

Isn’t it … more than fair to ask what on Earth we – and more particularly, the medical They – have been doing all these years to let the health machine develop with such a lack of quality control?

The answer dates back to Codman’s bone cancer registry half a century earlier. The medical establishment on both sides of the Atlantic had been avoiding with all their might the scrutiny that data would bring.

Computers finally acquire medical currency

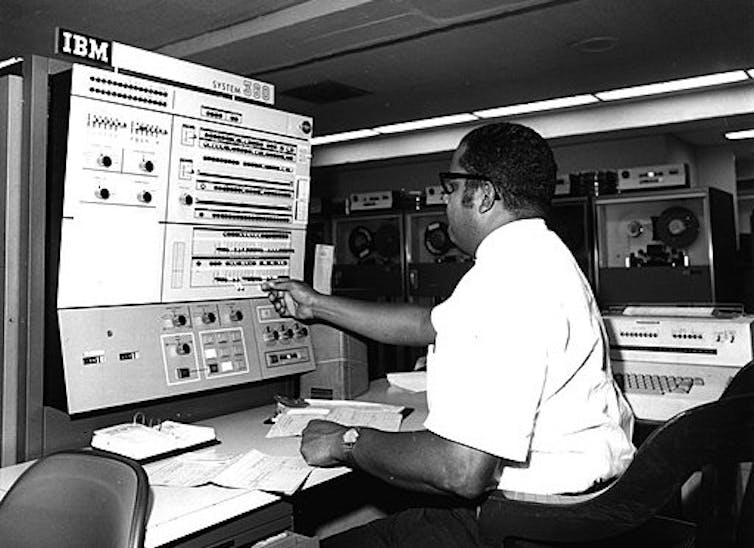

Despite their increasing ubiquity in the 1970s and 80s, computers had still only haltingly joined the medical mainstream. Though a smattering of AI applications started to appear in healthcare in the 1970s, it was only in the 1990s that computers really began to acquire some medical currency.

In a page borrowed straight from Codman’s time, the pioneering American biomedical informatician Edward Shortliffe noted in 1993 that the future of AI in medicine relied on the realisation that “the practice of medicine is inherently an information-management task”.

In the US, the Institute of Medicine and the President’s Information Technology Advisory Council released reports highlighting the failure of medicine to fully embrace information technology. By 2004, a newly appointed national coordinator for health information technology was charged with the herculean task of establishing an electronic medical record for all Americans by 2014.


This explosion of interest in bringing computers into healthcare made it an attractive and potentially lucrative area for investment. So it is no surprise that IBM celebrated Watson’s winning turn on Jeopardy! in 2011 by putting it to work on an oncology-focused programme with multiple US-based clinical partners chosen on the basis of their access to medical data.

The idea was laudable. Watson would do what machine learning algorithms do best: mining the large amounts of data these institutions had at their disposal, searching for patterns that might help to improve treatment. But the complexity of cancer and the frustratingly unique responses of patients to it, yoked together by data systems that were sometimes incomplete and sometimes incompatible with one another or with machine learning’s methods more generally, limited Watson’s ability to be useful.

One sorry example was Watson’s Oncology Expert Advisor, a collaboration with the MD Anderson Cancer Center in Houston, Texas. This had begun its life as a “bedside diagnostic tool” that pored through patient records, scientific literature and doctors’ notes in order to make real-time treatment recommendations. Unfortunately, Watson couldn’t “read” the doctors’ notes. While good at mining the scientific literature, it couldn’t apply these large-scale discussions to the specifics of the individuals in front of it. By 2017, the project had been shelved.

Elsewhere, at New York City’s famed Memorial Sloan Kettering Cancer Center, clinicians found a more elaborate – and infinitely more problematic – way forward. Rather than relying on the retrospective data that is machine learning’s usual fodder, clinicians invented new “synthetic” cases that were, by virtue of having been invented, infinitely less messy and more complete than any real data could be.

The project re-litigated the “data v expertise” debate of Codman’s time – once again in Codman’s favour – since this invented data had built into it the specifics of cancer treatment as understood by a small group of clinicians at a single hospital. Bias, in other words, was programmed directly in, and those engaged in training the system knew it.

Viewing historical patient data as too narrow, they rationalised that replacing this with data that reflected their own collective experience, intuition and judgment could build into Watson For Oncology the newest and best treatments. Of course, this didn’t work any better in the early 21st century than it had in the early 20th.


Furthermore, while these clinicians sidestepped the issue of real data’s impenetrable messiness, treatment options available at a wealthy hospital in Manhattan were far removed from those available in the other localities that Watson was meant to serve. The contrast was perhaps starkest when Watson was introduced to other parts of the world, only to find that the treatment regimens it recommended either didn’t exist or weren’t in line with the local and national infrastructures governing how healthcare was done there.

Even in the US, the consensus, as one unnamed physician in Florida reported back to IBM, was that Watson was a “piece of shit”. Most of the time, it either told clinicians what they already knew or offered up advice that was incompatible with local conditions or the specifics of a patient’s illness. At best, it offered up a snapshot of the views of a select few clinicians at a moment in time, now reified as “facts” that should apply uniformly and everywhere they went.

Many of the elegies written to mark Watson’s selling-off in 2022, after it had failed to make good on its promise in healthcare, attributed its downfall to the same kind of overpromise and under-delivery that has spelled the end for many health technology start-ups.

Some maintained that the scaling-up of Watson from gameshow savant to oncological wunderkind might have been successful with more time. Perhaps. But in 2011, time was of the essence. To capitalise on the goodwill toward Watson and IBM that Jeopardy! had created, to be the trailblazer into the lucrative but technologically backward world of healthcare, had meant striking first and fast.

Watson’s high-profile failure highlights an overlooked barrier to modern, data-driven healthcare. In its encounters with real, human patients, Watson stirred up the same anxieties that Codman had encountered – difficult questions about what exactly it is that medicine produces: care, and the human touch that comes with it; or cure, and the information management tasks that play a critical role here?

A 2019 study of US patient perspectives of AI’s role in healthcare gave these concerns some statistical shape. Though some felt optimistic about AI’s potential to improve healthcare, a vast majority gave voice to fundamental misgivings about relinquishing medicine to machine learning algorithms that could not explain the logic they employed to reach their diagnosis. Surely the absence of a physician’s judgment would increase the chance of misdiagnosis?

The persistence of this worry has quite often resulted in caveating the work of machine learning with reassurances that humans are still in charge. In a 2020 report on the InnerEye project, for instance, which used retrospective data to identify tumours on patient scans, Yvonne Rimmer, a clinical oncologist at Addenbrooke’s Hospital in Cambridge, addressed this concern:

It’s important for patients to know that the AI is helping me in my professional role. It’s not replacing me in the process. I double-check everything the AI does, and can change it if I need to.

Data’s uncertain role in the future of healthcare

Today, whether a doctor gives you your diagnosis or you get it from a computer, that diagnosis is not based on the intuition, judgment or experience of either doctor or patient. It’s driven by data that has made our cultures of mainstream care relatively more uniform and of a higher standard. Just as Codman foresaw, the introduction of data in medicine has also forced a greater degree of transparency, both in terms of methodologies and effectiveness.

However, the more important – and potentially intractable – problem with this contemporary approach to health is its lack of representation. As the Sloan Kettering dalliance with Watson began to indicate, datasets are not the “impersonal proofs” that Codman took them to be.

Even under less egregiously subjective conditions, data undeniably replicates and concretises the biases of society itself. As MIT computer scientist Marzyeh Ghassemi explains, data offers the “sheen of objectivity” while replicating the ethnic, racial, gender and age biases of institutionalised medicine. Thus the tools, tests and techniques that are based on this data are also not impartial.

Ghassemi highlights the inaccuracy of pulse oximeters, often calibrated on light-skinned individuals, for those with darker skin. Others might note the outcry over the gender bias in cardiology, spelled out especially in higher mortality rates for women who have heart attacks.

The list goes on and on. Remember the human genome project, that big data triumph which has, according to the US National Institutes of Health website, “accelerated the study of human biology and improved the practice of medicine”? It almost exclusively drew upon genetic studies of white Europeans. According to Esteban Burchard at the University of California, San Francisco:

96% of genetic studies have been done on people with European origin, even though Europeans make up less than 12% of the world’s population … The human genome project should have been called the European genome project.

A lack of representative data has implications for big data projects across the board – not least for precision medicine, which is widely touted as the antidote to the problems of impersonal, algorithm-driven healthcare.

Precision or “personalised” medicine seeks to address one of the main perceived drawbacks of data-based medicine by locating finer-grained commonalities between smaller and smaller subsets of the population. By focusing on data at a genetic and cellular level, it could yet counter the criticism that the data-driven approach of recent decades is too blunt and insensitive a tool, such that “even the most frequently prescribed drugs for the most common conditions have very limited efficacy”, according to computational biologist Chloe-Agathe Azencott.

But personalised medicine still feeds on the same depersonalised data as medicine more generally, so it too is handicapped by data’s biases. And even if it could step beyond the problems of biased data – and, indeed, institutions – the question of its role in the future of our everyday healthcare doesn’t end there.

Even taking the utopian view that personalised medicine might make possible treatments as individual as we are, pharmaceutical firms won’t develop these treatments unless they’re profitable. And that requires either prices so high that only the wealthiest of us could afford them, or a market so big that these firms can “achieve the requisite return on investment”. Truly individualised care is not really on the table.

If our goal in healthcare is to help more people by being more representative, more inclusive and more attentive to individual difference in the medical everyday of diagnosis and treatment, big data isn’t going to help us out. At least not as things currently stand.

For the story of healthcare data so far has pointed us squarely in the opposite direction, towards homogenisation and standardisation as medical goals. Laudable as the rationales for such a focus for medicine have been at different moments in our history, our expectations for the potential for machine learning to enable all of us to live longer, healthier lives remain something of a pipe dream. Right now it is still us humans, not our computer overlords, who hold most sway over our individual health outcomes.

This article was originally published at theconversation.com