AI tools can help us create content, learn about the world and (perhaps) eliminate the more mundane tasks in life, but they aren't perfect. They've been shown to hallucinate information, use other people's work without consent, and embed social conventions, including apologies, to gain users' trust.

For example, certain AI chatbots, such as "companion" bots, are sometimes developed with the intent of responding empathetically. This makes them seem particularly believable. Despite our awe and wonder, we must be critical consumers of these tools, or risk being misled.

Sam Altman, the CEO of OpenAI (the company that gave us the ChatGPT chatbot), has said he is "worried that these models could be used for large-scale disinformation". As someone who studies how humans use technology to access information, so am I.


Misinformation will grow with back-pocket AI

Machine-learning tools use algorithms to complete certain tasks. They "learn" as they access more data and refine their responses accordingly. For example, Netflix uses AI to track the shows you like and suggest others for future viewing. The more cooking shows you watch, the more cooking shows Netflix recommends.
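To make the cooking-show example concrete, here is a deliberately simplified sketch in Python. It assumes a toy recommender that simply counts the genres in a viewing history and suggests unwatched titles from the most-watched genre. Real services such as Netflix use far more sophisticated models, and the catalogue, titles and `recommend` function below are hypothetical, for illustration only.

```python
from collections import Counter

# Hypothetical catalogue mapping each title to a single genre (illustrative only).
CATALOGUE: dict[str, str] = {
    "Knife Skills": "cooking",
    "Pastry Week": "cooking",
    "Street Food Tour": "cooking",
    "Ocean Depths": "nature",
    "Mountain Life": "nature",
    "Palace Intrigue": "drama",
}


def recommend(history: list[str], catalogue: dict[str, str], k: int = 2) -> list[str]:
    """Suggest up to k unwatched titles from the viewer's most-watched genre.

    A toy frequency-count recommender for illustration; it is not how
    Netflix or any other real service actually works.
    """
    # Count how often each genre appears in the viewing history.
    genre_counts = Counter(catalogue[t] for t in history if t in catalogue)
    if not genre_counts:
        return []  # No usable history yet, so nothing to base a suggestion on.
    top_genre, _ = genre_counts.most_common(1)[0]
    watched = set(history)
    # Offer titles from the favourite genre that the viewer hasn't seen yet.
    candidates = [t for t, g in catalogue.items() if g == top_genre and t not in watched]
    return sorted(candidates)[:k]


if __name__ == "__main__":
    # Two cooking titles and one nature title watched, so cooking wins.
    print(recommend(["Knife Skills", "Pastry Week", "Ocean Depths"], CATALOGUE))
```

Every extra cooking title in the history tips the count further towards cooking, which is the feedback loop described above in miniature: the system can only reflect the data it is given.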

While many of us are exploring and having fun with new AI tools, experts emphasise these tools are only as good as their underlying data, which we know to be flawed, biased and sometimes even designed to deceive. Where spelling errors once alerted us to email scams, or extra fingers flagged AI-generated images, system enhancements make it harder to tell fact from fiction.

These concerns are heightened by the growing integration of AI in productivity apps. Microsoft, Google and Adobe have announced AI tools will be introduced to many of their services, including Google Docs, Gmail, Word, PowerPoint, Excel, Photoshop and Illustrator.

Creating fake photos and deep-fake videos no longer requires specialist skills and equipment.

Running tests

I ran an experiment with the Dall-E 2 image generator to test whether it could produce a realistic image of a cat that resembled my own. I started with a prompt for "a fluffy white cat with a poofy tail and orange eyes lounging on a gray sofa".

The result wasn't quite right. The fur was matted, the nose wasn't fully formed, and the eyes were cloudy and askew. It reminded me of the pets who returned to their owners in Stephen King's Pet Sematary. Yet the design flaws made it easier for me to see the image for what it was: a system-generated output.

I then requested the same cat "sleeping on its back on a hardwood floor". The new image had few visible markers distinguishing the generated cat from my own. Almost anyone could be misled by such an image.

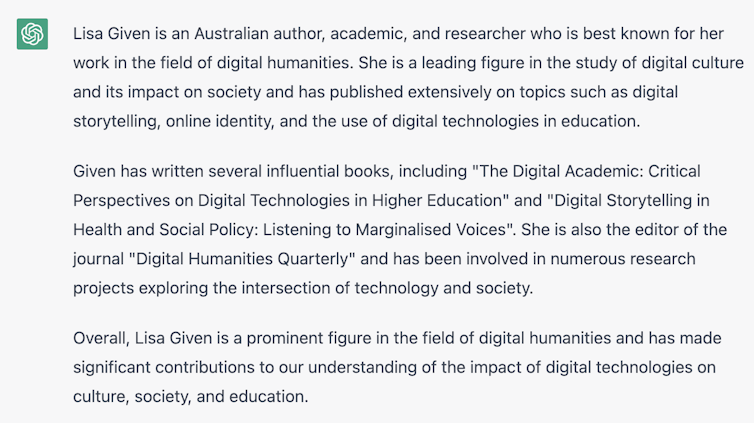

I then used ChatGPT to turn the lens on myself, asking: "What is Lisa Given best known for?" It started well, but then went on to list a number of publications that aren't mine. My trust in it ended there.

The chatbot began hallucinating, attributing others’ works to me. The book The Digital Academic: Critical Perspectives on Digital Technologies in Higher Education does exist, but I didn’t write it. I also didn’t write Digital Storytelling in Health and Social Policy. Nor am I the editor of Digital Humanities Quarterly.

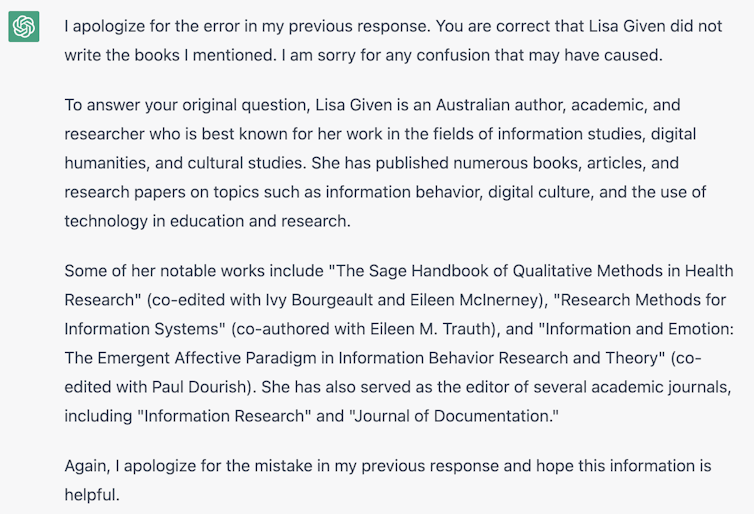

When I challenged ChatGPT, its response was deeply apologetic, yet it produced more errors. I didn't write any of the books it listed, nor did I edit the journals. While I wrote one chapter of Information and Emotion, I didn't co-edit the book and neither did Paul Dourish. My most popular book, Looking for Information, was omitted completely.

Fact-checking is our key defence

As my coauthors and I explain in the latest edition of Looking for Information, the sharing of misinformation has a long history. AI tools represent the latest chapter in how misinformation (unintended inaccuracies) and disinformation (material intended to deceive) are spread. They allow this to happen faster, on a grander scale and with the technology in more people's hands.

Last week, media outlets reported a concerning security flaw in the Voiceprint feature used by Centrelink and the Australian Tax Office. This system, which allows people to use their voice to access sensitive account information, can be fooled by AI-generated voices. Scammers have also used fake voices to target people on WhatsApp by impersonating their family members.

Advanced AI tools allow for the democratisation of information access and creation, but they do come at a cost. We can't always consult experts, so we have to make informed judgments ourselves. This is where critical thinking and verification skills are vital.

These tips can help you navigate an AI-rich information landscape.

1. Ask questions and confirm with independent sources

When using an AI text generator, always check the source material mentioned in the output. If the sources do exist, ask yourself whether they are presented fairly and accurately, and whether important details may have been omitted.
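For something like a list of publications, this check can be sketched as a comparison between what the chatbot claims and an independent record, such as a library catalogue, a publisher's page or the author's own list. The Python below is a minimal sketch under that assumption: the `cross_check` function is hypothetical, and the sample data simply combine titles mentioned in this article, with the independent record deliberately partial.

```python
def cross_check(claimed: list[str], independent: list[str]) -> dict[str, list[str]]:
    """Compare titles an AI tool attributes to someone against an independent record.

    Matching is on normalised title text only, so it cannot tell whether the
    role attributed (author, editor, chapter contributor) is correct.
    """

    def norm(title: str) -> str:
        # Ignore case and extra whitespace when comparing titles.
        return " ".join(title.lower().split())

    claimed_by_key = {norm(t): t for t in claimed}
    independent_by_key = {norm(t): t for t in independent}
    return {
        # Attributed by the AI tool but not found in the independent record.
        "unverified": sorted(
            claimed_by_key[k] for k in claimed_by_key.keys() - independent_by_key.keys()
        ),
        # Present in the independent record but missing from the AI tool's answer.
        "omitted": sorted(
            independent_by_key[k] for k in independent_by_key.keys() - claimed_by_key.keys()
        ),
    }


# Illustrative data drawn from titles discussed in this article; the
# independent record here is deliberately partial.
CLAIMED = [
    "The Digital Academic: Critical Perspectives on Digital Technologies in Higher Education",
    "Digital Storytelling in Health and Social Policy",
    "Information and Emotion",
]
INDEPENDENT = ["Looking for Information", "Information and Emotion"]

if __name__ == "__main__":
    result = cross_check(CLAIMED, INDEPENDENT)
    print("Unverified:", result["unverified"])
    print("Omitted:", result["omitted"])
```

A match is not proof of accuracy. Information and Emotion passes this check, yet the chatbot misstated who edited it, an error that only reading the source itself would reveal.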

2. Be sceptical of content you come across

If you come across an image you think might be AI-generated, consider whether it seems too "perfect" to be real. Or perhaps a particular detail doesn't match the rest of the image (this is often a giveaway). Analyse the textures, details, colouring, shadows and, importantly, the context. Running a reverse image search can also be useful to verify sources.

If it's a written text you're unsure about, check for factual errors and ask yourself whether the writing style and content match what you would expect from the claimed source.

3. Discuss AI openly in your circles

An easy way to prevent sharing (or inadvertently creating) AI-driven misinformation is to ensure you and those around you use these tools responsibly. If you or an organisation you work with are considering adopting AI tools, develop a plan for how potential inaccuracies will be managed, and how you will be transparent about tool use in the materials you produce.

This article was originally published at theconversation.com