In 1956, during a year-long trip to London and in his early 20s, the mathematician and theoretical biologist Jack D. Cowan visited Wilfred Taylor and his strange new “learning machine”. On his arrival he was baffled by the “huge bank of apparatus” that confronted him. Cowan could only stand by and watch “the machine doing its thing”. The thing it appeared to be doing was performing an “associative memory scheme” – it seemed to be able to learn how to find connections and retrieve data.

It may have looked like clunky blocks of circuitry, soldered together by hand in a mass of wires and boxes, but what Cowan was witnessing was an early analogue form of a neural network – a precursor to the most advanced artificial intelligence of today, including the much discussed ChatGPT with its ability to generate written content in response to almost any command. ChatGPT’s underlying technology is a neural network.

As Cowan and Taylor stood and watched the machine work, they really had no idea exactly how it was managing to perform this task. The answer to Taylor’s mystery machine brain can be found somewhere in its “analog neurons”, in the associations made by its machine memory and, most importantly, in the fact that its automated functioning couldn’t really be fully explained. It would take decades for these systems to find their purpose and for that power to be unlocked.

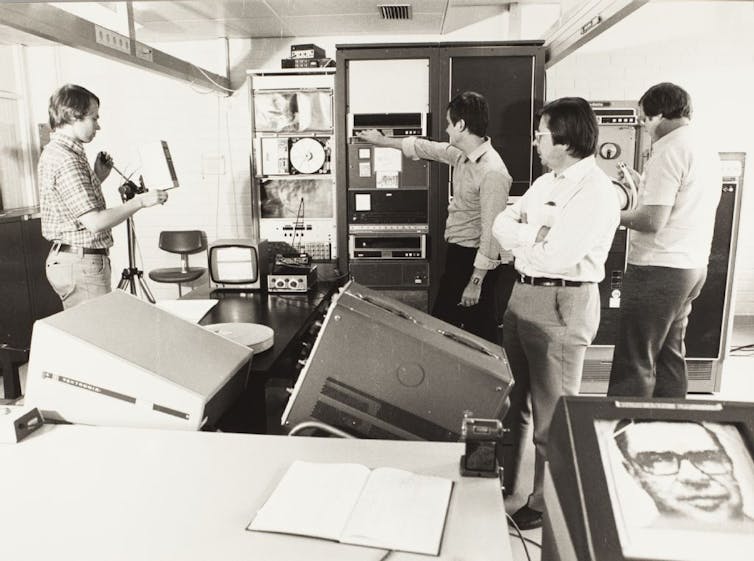

University of Chicago Photographic Archive, Hanna Holborn Gray Special Collections Research Center.

The term neural network incorporates a wide range of systems, yet centrally, according to IBM, these “neural networks – also known as artificial neural networks (ANNs) or simulated neural networks (SNNs) – are a subset of machine learning and are at the heart of deep learning algorithms”. Crucially, the term itself and their form and “structure are inspired by the human brain, mimicking the way that biological neurons signal to one another”.

There may have been some residual doubt as to their value in their initial stages, but as the years have passed AI fashions have swung firmly towards neural networks. They are now often understood to be the future of AI. They have big implications for us and for what it means to be human. We have heard echoes of these concerns recently with calls to pause new AI developments for a six month period to ensure confidence in their implications.

It would certainly be a mistake to dismiss the neural network as being solely about glossy, eye-catching new gadgets. They are already well established in our lives. Some are powerful in their practicality. As far back as 1989, a team led by Yann LeCun at AT&T Bell Laboratories used back-propagation techniques to train a system to recognise handwritten postal codes. The recent announcement by Microsoft that Bing searches will be powered by AI, making it your “copilot for the web”, illustrates how the things we discover and how we understand them will increasingly be a product of this type of automation.

Drawing on vast data to find patterns, AI can similarly be trained to do things like image recognition at speed – resulting in them being incorporated into facial recognition, for instance. This ability to identify patterns has led to many other applications, such as predicting stock markets.

Neural networks are changing how we interpret and communicate too. Developed by the interestingly titled Google Brain Team, Google Translate is another prominent application of a neural network.

You wouldn’t want to play Chess or Shogi with one either. Their grasp of rules and their recall of strategies and all recorded moves means that they are exceptionally good at games (although ChatGPT seems to struggle with Wordle). The systems that are troubling human Go players (Go is a notoriously tricky strategy board game) and Chess grandmasters are made from neural networks.

But their reach goes far beyond these instances and continues to expand. A search of patents restricted only to mentions of the exact phrase “neural networks” produces 135,828 results. With this rapid and ongoing expansion, the chances of us being able to fully explain the influence of AI may become ever thinner. These are the questions I have been examining in my research and my new book on algorithmic thinking.

Mysterious layers of ‘unknowability’

Looking back at the history of neural networks tells us something important about the automated decisions that define our present or those that will have a possibly more profound impact in the future. Their presence also tells us that we are likely to understand the decisions and impacts of AI even less over time. These systems are not simply black boxes, they are not just hidden bits of a system that can’t be seen or understood.

It is something different, something rooted in the aims and design of these systems themselves. There is a long-held pursuit of the unexplainable. The more opaque, the more authentic and advanced the system is thought to be. It is not just about the systems becoming more complex or the control of intellectual property limiting access (although these are part of it). It is instead to say that the ethos driving them has a particular and embedded interest in “unknowability”. The mystery is even coded into the very form and discourse of the neural network. They come with deeply piled layers – hence the phrase deep learning – and within those depths are the even more mysterious sounding “hidden layers”. The mysteries of these systems are deep below the surface.

There is a good chance that the greater the impact that artificial intelligence comes to have in our lives the less we will understand how or why. Today there is a strong push for AI that is explainable. We want to know how it works and how it arrives at decisions and outcomes. The EU is so concerned by the potentially “unacceptable risks” and even “dangerous” applications that it is currently advancing a new AI Act intended to set a “global standard” for “the development of secure, trustworthy and ethical artificial intelligence”.

Those new laws will be based on a need for explainability, demanding that “for high-risk AI systems, the requirements of high quality data, documentation and traceability, transparency, human oversight, accuracy and robustness, are strictly necessary to mitigate the risks to fundamental rights and safety posed by AI”. This is not just about things like self-driving cars (although systems that ensure safety fall into the EU’s category of high-risk AI); it is also a worry that systems will emerge in the future that will have implications for human rights.

This is part of wider calls for transparency in AI so that its activities can be checked, audited and assessed. Another example would be the Royal Society’s policy briefing on explainable AI, in which they point out that “policy debates internationally increasingly see calls for some form of AI explainability, as part of efforts to embed ethical principles into the design and deployment of AI-enabled systems”.

But the story of neural networks tells us that we are likely to get further away from that objective in the future, rather than closer to it.

Inspired by the human brain

These neural networks may be complex systems yet they have some core principles. Inspired by the human brain, they seek to copy or simulate forms of biological and human thinking. In terms of structure and design they are, as IBM also explains, comprised of “node layers, containing an input layer, one or more hidden layers, and an output layer”. Within this, “each node, or artificial neuron, connects to another”. Because they require inputs and information to create outputs they “rely on training data to learn and improve their accuracy over time”. These technical details matter but so too does the wish to model these systems on the complexities of the human brain.
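To make the idea of a network that relies on training data “to learn and improve” a little more concrete, here is a minimal sketch, assuming a single artificial neuron trained with the classic perceptron learning rule on a hypothetical toy dataset, the logical AND function. The perceptron rule is an older and much simpler technique than the back-propagation used in modern networks and is chosen here only for brevity. The names `predict`, `train_perceptron` and `AND_DATA` are illustrative and do not come from any library.

```python
# A single artificial neuron ("node"): a weighted sum of its inputs plus a
# bias, passed through a step function. Trained with the perceptron rule,
# a simple illustrative stand-in for how modern networks are trained.

def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def accuracy(weights, bias, data):
    """Fraction of examples the neuron currently gets right."""
    correct = sum(predict(weights, bias, x) == y for x, y in data)
    return correct / len(data)


def train_perceptron(data, epochs=10, learning_rate=1):
    """After each wrong answer, nudge the weights toward the right one."""
    weights = [0] * len(data[0][0])
    bias = 0
    history = []
    for _ in range(epochs):
        for inputs, target in data:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
        history.append(accuracy(weights, bias, data))  # accuracy after each pass
    return weights, bias, history


# Hypothetical training data: inputs paired with the desired output (logical AND).
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias, history = train_perceptron(AND_DATA)
print(history)  # [0.25, 0.5, 0.75, 0.5, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
```

Even in this tiny case, accuracy does not climb smoothly: it dips in the fourth pass before settling at 100%, because training is a sequence of small corrections rather than a planned route to the answer.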

Grasping the ambition behind these systems is vital in understanding what these technical details have come to mean in practice. In a 1993 interview, the neural network scientist Teuvo Kohonen concluded that a “self-organising” system “is my dream”, operating “something like what our nervous system is doing instinctively”. As an example, Kohonen pictured how a “self-organising” system, a system that monitored and managed itself, “could be used as a monitoring panel for any machine … in every airplane, jet plane, or every nuclear power station, or every car”. This, he thought, would mean that in the future “you could see immediately what condition the system is in”.

Aalto University Archives

The overarching objective was to have a system capable of adapting to its surroundings. It would be simple and autonomous, operating in the manner of the nervous system. That was the dream, to have systems that could handle themselves without the need for much human intervention. The complexities and unknowns of the brain, the nervous system and the real world would soon come to inform the development and design of neural networks.

‘Something fishy about it’

But jumping back to 1956 and that strange learning machine, it was the hands-on approach that Taylor had taken when building it that immediately caught Cowan’s attention. He had clearly sweated over the assembly of the bits and pieces. Taylor, Cowan observed during an interview on his own part in the story of these systems, “didn’t do it by theory, and he didn’t do it on a computer”. Instead, with tools in hand, he “actually built the hardware”. It was a material thing, a combination of parts, perhaps even a contraption. And it was “all done with analogue circuitry”, taking Taylor, Cowan notes, “several years to build it and to play with it”. A case of trial and error.

Understandably Cowan wanted to get to grips with what he was seeing. He tried to get Taylor to explain this learning machine to him. The clarifications didn’t come. Cowan couldn’t get Taylor to describe to him how the thing worked. The analogue neurons remained a mystery. The more surprising problem, Cowan thought, was that Taylor “didn’t really understand himself what was going on”. This wasn’t just a momentary breakdown in communication between the two scientists with different specialisms; it was more than that.

In an interview from the mid-1990s, thinking back to Taylor’s machine, Cowan revealed that “to this day in published papers you can’t quite understand how it works”. This conclusion is suggestive of how the unknown is deeply embedded in neural networks. The unexplainability of these neural systems has been present even from the fundamental and developmental stages dating back nearly seven decades.

This mystery persists today and is to be found within advancing forms of AI. The unfathomability of the functioning of the associations made by Taylor’s machine led Cowan to wonder if there was “something fishy about it”.

Long and tangled roots

Cowan referred back to his brief visit with Taylor when asked about the reception of his own work some years later. Into the 1960s people were, Cowan reflected, “a little slow to see the point of an analogue neural network”. This was despite, Cowan recalls, Taylor’s 1950s work on “associative memory” being based on “analog neurons”. The Nobel Prize-winning neural systems expert Leon N. Cooper concluded that developments around the application of the brain model in the 1960s were regarded “as among the many deep mysteries”. Because of this uncertainty there remained a scepticism about what a neural network might achieve. But things slowly began to change.

Some 30 years ago the neuroscientist Walter J. Freeman, who was surprised by the “remarkable” range of applications that had been found for neural networks, was already commenting on the fact that he didn’t see them as “a fundamentally new kind of machine”. They were a slow burn, with the technology coming first and then subsequent applications being found for it. This took time. Indeed, to find the roots of neural network technology we might head back even further than Cowan’s visit to Taylor’s mysterious machine.

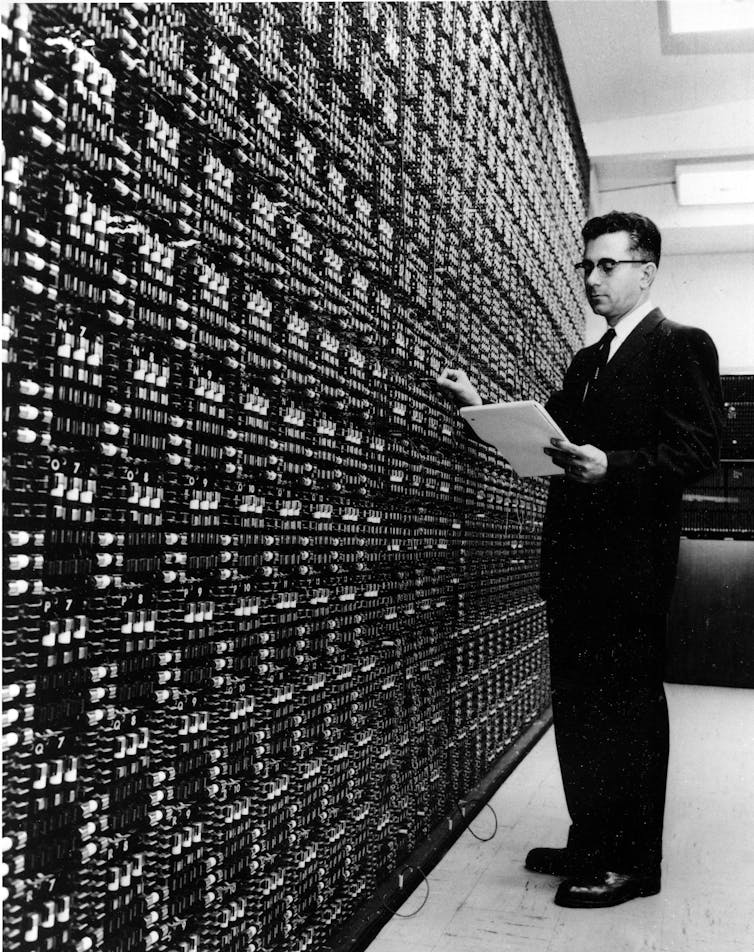

AP Photo/Aerojet-General/W.E. Miller

The neural net scientist James Anderson and the science journalist Edward Rosenfeld have noted that the background to neural networks goes back into the 1940s and some early attempts to, as they describe, “understand the human nervous systems and to build artificial systems that act the way we do, at least a little bit”. And so, in the 1940s, the mysteries of the human nervous system also became the mysteries of computational thinking and artificial intelligence.

Summarising this long story, the computer science writer Larry Hardesty has pointed out that deep learning in the form of neural networks “have been going in and out of fashion for more than 70 years”. More specifically, he adds, these “neural networks were first proposed in 1944 by Warren McCulloch and Walter Pitts, two University of Chicago researchers who moved to MIT in 1952 as founding members of what’s sometimes called the first cognitive science department”.

Semantic Scholar

Elsewhere, 1943 is usually given as the first year for the technology. Either way, for roughly 70 years accounts suggest that neural networks have moved in and out of vogue, often neglected but then sometimes taking hold and moving into more mainstream applications and debates. The uncertainty persisted. Those early developers frequently describe the importance of their research as being overlooked, until it found its purpose often years and sometimes decades later.

Moving from the 1960s into the late 1970s we can find further stories of the unknown properties of these systems. Even then, after three decades, the neural network was still to find a sense of purpose. David Rumelhart, who had a background in psychology and was a co-author of a set of books published in 1986 that would later drive attention back towards neural networks, found himself collaborating on the development of neural networks with his colleague Jay McClelland.

As well as being colleagues they had also recently encountered each other at a conference in Minnesota where Rumelhart’s talk on “story understanding” had provoked some discussion among the delegates.

Following that conference McClelland returned with an idea of how to develop a neural network that might combine models to be more interactive. What matters here is Rumelhart’s recollection of the “hours and hours and hours of tinkering on the computer”.

We sat down and did all this in the computer and built these computer models, and we just didn’t understand them. We didn’t understand why they worked or why they didn’t work or what was critical about them.

Like Taylor, Rumelhart found himself tinkering with the system. They too created a functioning neural network and, crucially, they also weren’t sure how or why it worked in the way that it did, seemingly learning from data and finding associations.

Mimicking the brain – layer after layer

You may already have noticed that when discussing the origins of neural networks the image of the brain and the complexity this evokes are never far away. The human brain acted as a sort of template for these systems. In the early stages in particular, the brain – still one of the great unknowns – became a model for how the neural network might function.

Shutterstock/CYB3RUSS

So these experimental new systems were modelled on something whose functioning was itself largely unknown. The neurocomputing engineer Carver Mead has spoken revealingly of the conception of a “cognitive iceberg” that he had found particularly appealing. It is only the tip of the iceberg of consciousness of which we are aware and which is visible. The scale and form of the rest remains unknown below the surface.

In 1998, James Anderson, who had been working for some time on neural networks, noted that when it came to research on the brain “our major discovery seems to be an awareness that we really don’t know what is going on”.

In a detailed account in the Financial Times in 2018, technology journalist Richard Waters noted how neural networks “are modelled on a theory about how the human brain operates, passing data through layers of artificial neurons until an identifiable pattern emerges”. This creates a knock-on problem, Waters proposed, as “unlike the logic circuits employed in a traditional software program, there is no way of tracking this process to identify exactly why a computer comes up with a particular answer”. Waters’ conclusion is that these outcomes cannot be unpicked. The application of this type of model of the brain, taking the data through many layers, means that the answer cannot readily be retraced. The multiple layering is a good part of the reason for this.

Hardesty also observed that these systems are “modelled loosely on the human brain”. This brings an eagerness to build in ever more processing complexity in order to try to match up with the brain. The result of this aim is a neural net that “consists of thousands or even millions of simple processing nodes that are densely interconnected”. Data moves through these nodes in only one direction. Hardesty observed that an “individual node might be connected to several nodes in the layer beneath it, from which it receives data, and several nodes in the layer above it, to which it sends data”.
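The one-directional flow Hardesty describes, with each node receiving data from the layer beneath it and passing its result to the layer above, can be sketched in a few lines. This is a minimal illustration, assuming a toy network with three input nodes, one hidden layer of two nodes and a single output node; the weights are hypothetical values picked by hand so the arithmetic is easy to follow (a real network would learn them from training data), and the ReLU activation is one common choice among several.

```python
# A minimal feed-forward pass: data flows in one direction only, from the
# input layer, through a hidden layer, to the output layer.

def relu(value):
    """A common activation function: negative totals become zero."""
    return max(0.0, value)


def layer_forward(inputs, weights, biases):
    """Each node receives data from every node in the layer beneath it:
    it multiplies each input by a connection weight, adds the results
    and a bias together, then applies the activation function."""
    return [
        relu(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass data up through each layer in turn, keeping every layer's output."""
    activations = [inputs]
    for weights, biases in layers:
        activations.append(layer_forward(activations[-1], weights, biases))
    return activations


# Hypothetical hand-picked weights: 3 input nodes -> 2 hidden nodes -> 1 output node.
LAYERS = [
    ([[1.0, -1.0, 1.0], [0.0, 2.0, -1.0]], [0.0, 1.0]),  # hidden layer
    ([[1.0, -2.0]], [3.0]),                               # output layer
]

activations = forward([1.0, 2.0, 3.0], LAYERS)
print(activations)  # [[1.0, 2.0, 3.0], [2.0, 2.0], [1.0]]
```

Here `activations[1]` holds the hidden layer’s values. In a toy of this size they are easy to print and trace by hand; with thousands of nodes across many layers the same numbers are still available, but they no longer offer a human reader an obvious account of why the output came out as it did.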

Models of the human brain were part of how these neural networks were conceived and designed from the outset. This is especially interesting when we consider that the brain was itself a mystery of the time (and in many ways still is).

‘Adaptation is the whole game’

Scientists like Mead and Kohonen wanted to create a system that could genuinely adapt to the world in which it found itself. It would respond to its conditions. Mead was clear that the value in neural networks was that they could facilitate this type of adaptation. At the time, and reflecting on this ambition, Mead added that producing adaptation “is the whole game”. This adaptation is needed, he thought, “because of the nature of the real world”, which he concluded is “too variable to do anything absolute”.

This problem had to be reckoned with especially as, he thought, this was something “the nervous system figured out a long time ago”. Not only were these innovators working with an image of the brain and its unknowns, they were combining this with a vision of the “real world” and the uncertainties, unknowns and variability that this brings. The systems, Mead thought, needed to be able to respond and adapt to circumstances without instruction.

Around the same time in the 1990s, Stephen Grossberg – an expert in cognitive systems working across maths, psychology and biomedical engineering – also argued that adaptation was going to be the important step in the longer term. Grossberg, as he worked away on neural network modelling, thought to himself that it is all “about how biological measurement and control systems are designed to adapt quickly and stably in real time to a rapidly fluctuating world”. As we saw earlier with Kohonen’s “dream” of a “self-organising” system, a notion of the “real world” becomes the context in which response and adaptation are being coded into these systems. How that real world is understood and imagined undoubtedly shapes how these systems are designed to adapt.

Hidden layers

As the layers multiplied, deep learning plumbed new depths. The neural network is trained using training data that, Hardesty explained, “is fed to the bottom layer – the input layer – and it passes through the succeeding layers, getting multiplied and added together in complex ways, until it finally arrives, radically transformed, at the output layer”. The more layers, the greater the transformation and the greater the distance from input to output. The development of Graphics Processing Units (GPUs), in gaming for instance, Hardesty added, “enabled the one-layer networks of the 1960s and the two- to three-layer networks of the 1980s to blossom into the 10-, 15-, even 50-layer networks of today”.

Neural networks are getting deeper. Indeed, it’s this adding of layers, according to Hardesty, that is “what the ‘deep’ in ‘deep learning’ refers to”. This matters, he proposes, because “currently, deep learning is responsible for the best-performing systems in almost every area of artificial intelligence research”.

But the mystery gets deeper still. As the layers of neural networks have piled higher their complexity has grown. It has also led to the growth in what are known as “hidden layers” within these depths. The discussion of the optimum number of hidden layers in a neural network is ongoing. The media theorist Beatrice Fazi has written that “because of how a deep neural network operates, relying on hidden neural layers sandwiched between the first layer of neurons (the input layer) and the last layer (the output layer), deep-learning techniques are sometimes opaque or illegible even to the programmers that originally set them up”.

As the layers increase (including those hidden layers) they become even less explainable – even, as it turns out, again, to those creating them. Making a similar point, the prominent and interdisciplinary new media thinker Katherine Hayles also noted that there are limits to “how much we can know about the system, a result relevant to the ‘hidden layer’ in neural net and deep learning algorithms”.

Pursuing the unexplainable

Taken together, these long developments are part of what the sociologist of technology Taina Bucher has called the “problematic of the unknown”. Expanding his influential research on scientific knowledge into the field of AI, Harry Collins has pointed out that the objective with neural nets is that they may be produced by a human, initially at least, but “once written this system lives its own life, as it were; without huge effort, exactly how this system is working can remain mysterious”. This has echoes of those long-held dreams of a self-organising system.

I’d add to this that the unknown and maybe even the unknowable have been pursued as a fundamental part of these systems from their earliest stages. There is a good chance that the greater the impact that artificial intelligence comes to have in our lives the less we will understand how or why.

But that doesn’t sit well with many today. We want to know how AI works and how it arrives at the decisions and outcomes that impact us. As developments in AI continue to shape our knowledge and understanding of the world, what we discover, how we are treated, how we learn, consume and interact, this impulse to understand will grow. When it comes to explainable and transparent AI, the story of neural networks tells us that we are likely to get further away from that objective in the future, rather than closer to it.

This article was originally published at theconversation.com