Researchers at the University of Surrey have developed a way to turn photos of dogs into detailed 3D models.

The training material? Not real dogs, but computer-generated images from the virtual world of the hit game Grand Theft Auto V (GTA V).

Moira Shooter, a doctoral student involved in the study, said: “Our model was trained on CGI dogs, but we were able to use it to create 3D skeletal models from photos of real animals. This could enable conservationists to spot injured wildlife or help artists create more realistic animals in the metaverse.”

Previous methods of teaching AI about 3D structure paired real photos with data on the objects' actual 3D positions, often obtained through motion-capture technology.

However, when these techniques are applied to dogs or other animals, there is often too much movement to track, and it is difficult to get dogs to cooperate for long enough.

To create their dog dataset, the researchers modified GTA V's code to swap the human characters for dog avatars, a process known as “modding.”

The researchers produced 118 videos of these virtual dogs performing various actions, such as sitting, walking, barking, and running, under different environmental conditions.

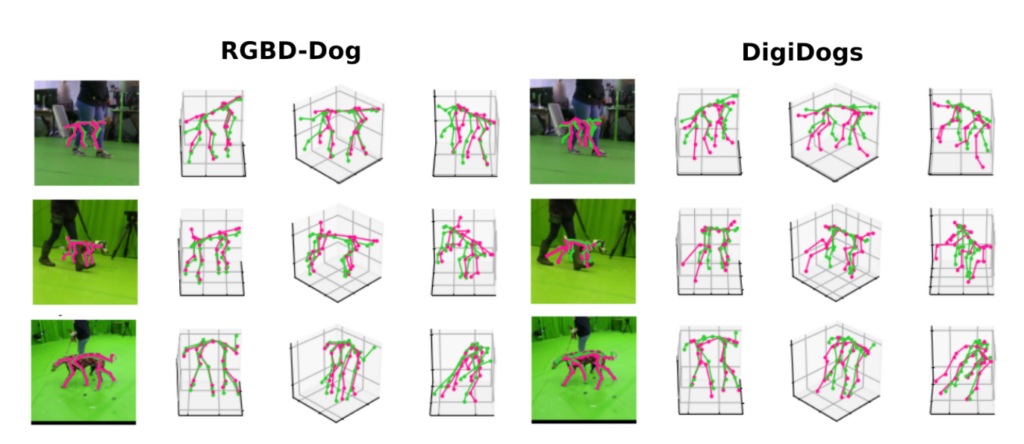

The result was “DigiDogs,” a large database of 27,900 images of dog movement, captured in ways that would not have been possible with real-world data collection.

With the dataset in hand, the researchers turned to Meta's DINOv2 AI model for its strong generalization capabilities and fine-tuned it on DigiDogs to predict 3D poses from single-view RGB images.

The researchers showed that training on DigiDogs produced more accurate and lifelike 3D dog poses than training on real-world datasets, thanks to the variety of dog appearances and actions it captures.

The improved performance of the model was confirmed by thorough qualitative and quantitative evaluations.

Although the study represents a significant advance in 3D animal modeling, the team acknowledges that much work remains, particularly in improving how the model predicts depth in the images (the Z coordinate).
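For readers curious about how 3D pose accuracy is typically scored, here is a minimal Python sketch, assuming each pose is a list of (x, y, z) joint coordinates in the same units. It uses mean per-joint position error (MPJPE), a common metric in 3D pose estimation; the article does not say which metrics the Surrey team reported, so this is illustrative only, and the function names `mpjpe` and `per_axis_error` are hypothetical. Splitting the error by axis shows how a depth (Z) weakness like the one the team describes would show up.

```python
import math

# Assumption: a pose is a list of (x, y, z) joint positions, same joint order in both poses.
Pose = list[tuple[float, float, float]]


def mpjpe(pred: Pose, gt: Pose) -> float:
    """Mean per-joint position error: average Euclidean distance between matching joints."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("poses must have the same, non-zero number of joints")
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(pred)


def per_axis_error(pred: Pose, gt: Pose) -> tuple[float, float, float]:
    """Mean absolute error along x, y and z separately (index 2 is depth)."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("poses must have the same, non-zero number of joints")
    n = len(pred)
    return tuple(sum(abs(p[a] - g[a]) for p, g in zip(pred, gt)) / n for a in range(3))


# Toy example: x and y are exact, but both joints are off in depth.
ground_truth = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
prediction = [(0.0, 0.0, 3.0), (10.0, 0.0, -4.0)]
print(mpjpe(prediction, ground_truth))           # 3.5
print(per_axis_error(prediction, ground_truth))  # (0.0, 0.0, 3.5)
```

In this toy case, all of the error sits on the Z axis, which is the kind of pattern a per-axis breakdown is meant to expose.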

Shooter described the potential impact of her work: “3D poses contain so much more information than 2D photos. From ecology to animation, this neat solution has so many uses.”

The paper won the Best Paper Award at the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV).

The work opens the door to better model performance in areas such as wildlife conservation and 3D object rendering for VR.

This article was originally published at dailyai.com