Searching for the most recent Hugging Face statistics?

Hugging Face is an AI platform where users collaborate on machine learning projects. It provides open-source tools for training and deploying models, and with over 200,000 models, it covers fields such as computer vision and natural language processing.

In this post, we share all the key statistics you need to know about Hugging Face.

Before we jump into the key statistics, here’s a quick history of Hugging Face that you shouldn’t miss.

What is Hugging Face, Who Built It, Why, and When

Hugging Face, a French-American company headquartered in New York City, was established in 2016 by French entrepreneurs Clément Delangue, Julien Chaumond, and Thomas Wolf.

Initially, the company developed a chatbot app targeting teenagers before pivoting to a machine learning platform after open-sourcing the chatbot’s model.

The Hugging Face Hub, the company’s platform, acts as a collaborative space where users can develop, train, and deploy NLP and ML models using open-source code.

Hugging Face simplifies model development by providing pre-trained models that users can fine-tune for specific tasks, thus democratizing AI and making it more accessible to developers.

It offers user-friendly tokenizers for text preprocessing and a large repository of NLP datasets through the Hugging Face Datasets library, supporting data scientists, researchers, and ML engineers in their projects.

Additionally, Hugging Face is renowned for its Transformers library, built for natural language processing applications.

What Does Hugging Face Mean, and Why Do They Use an Emoji?

“Hugging Face” was chosen because the founders wanted to be the first company to go public with an emoji rather than the standard three-letter ticker symbol.

They picked the hugging face emoji because it was their favorite, and they thought it would make a memorable and unique name for their company.

The choice paid off: the Hugging Face community has embraced the name, and it has become a recognizable brand in the machine learning and data science space.

Key Hugging Face Statistics at a Glance

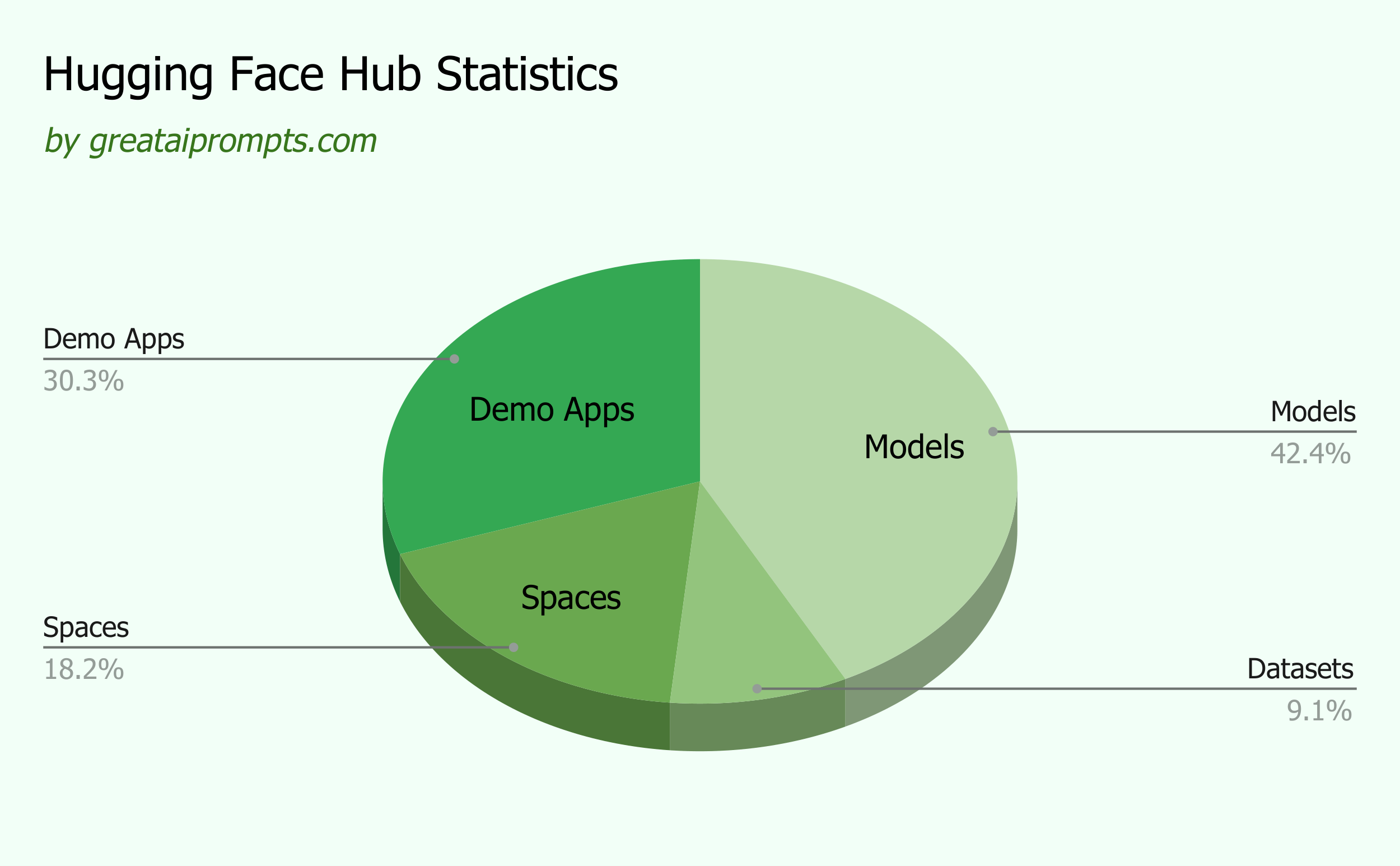

- Over 1 million models, datasets, and apps hosted. In general it has 350k models, 75k datasets, and 150k demo apps. ()

- Completed a $235 million Series D investment in 2023, reaching a $4.5 billion valuation. ()

- Total funding raised: $395.2 million across six rounds since 2016. ()

- Revenue was under $10 million in 2021, with a projection of $30 to $50 million in 2023. ()

- Used by over 10,000 corporations for AI and machine learning development. ()

- Released BLOOM, a 176 billion-parameter language model. ()

- Has over 1,000 paying customers, including Intel, Pfizer, and Bloomberg. ()

- Competes directly with H2O.ai, spaCy, and not directly with OpenAI. ()

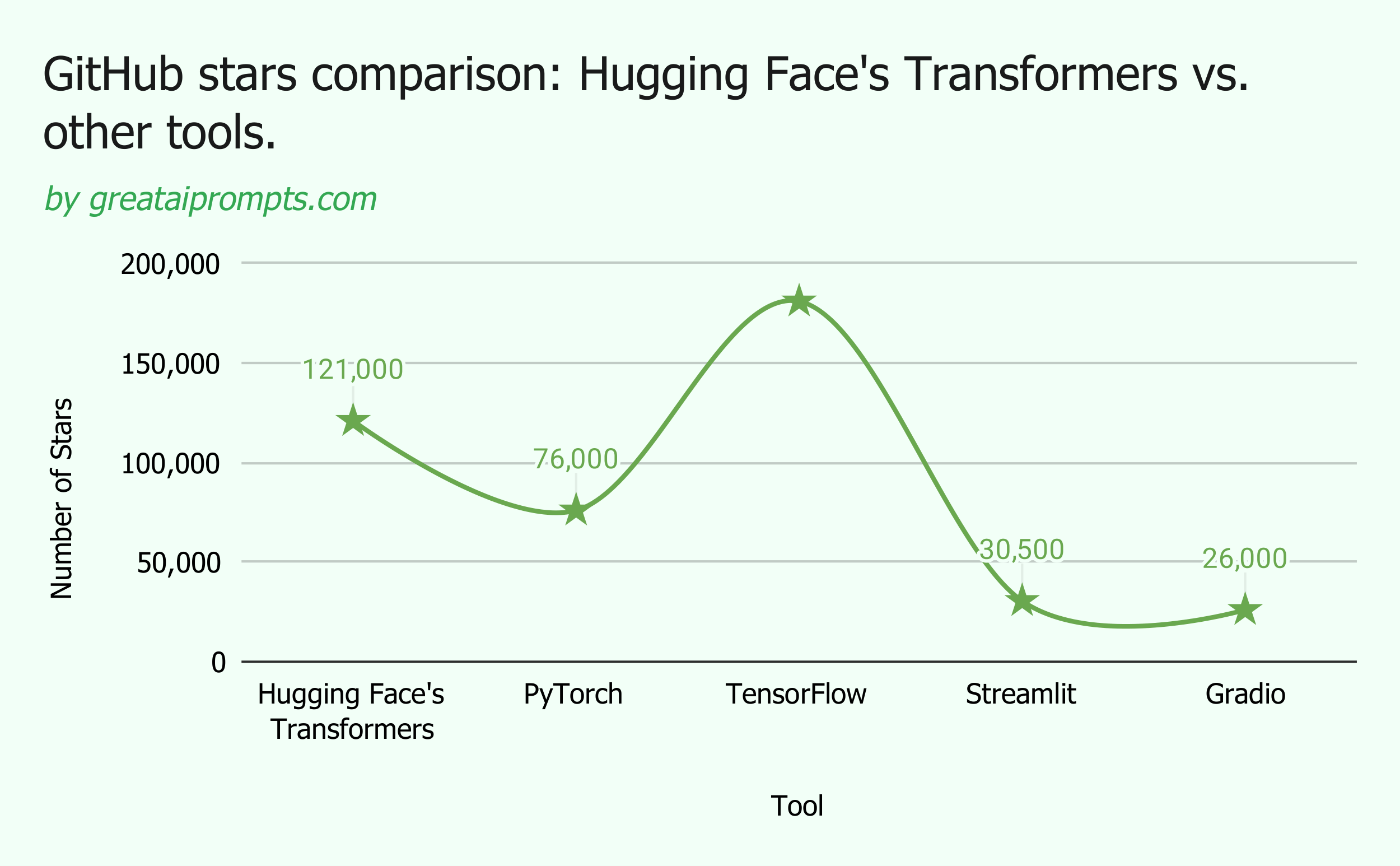

- Transformers library has over 100,000 stars on GitHub. ()

- Website receives 18.9 Million visits in monthly. ()

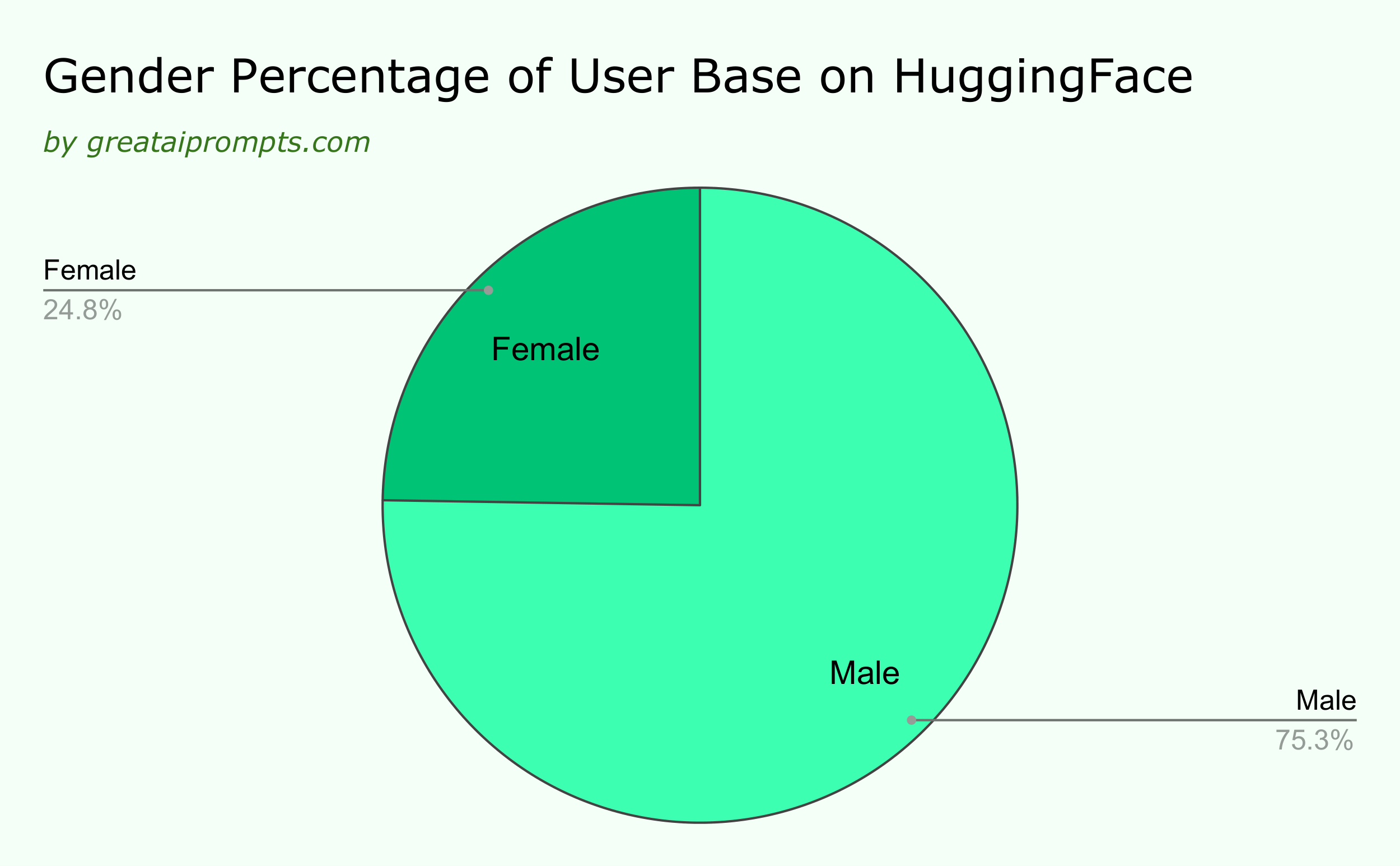

- 75.25% of website visitors are male; 24.75% are female. ()

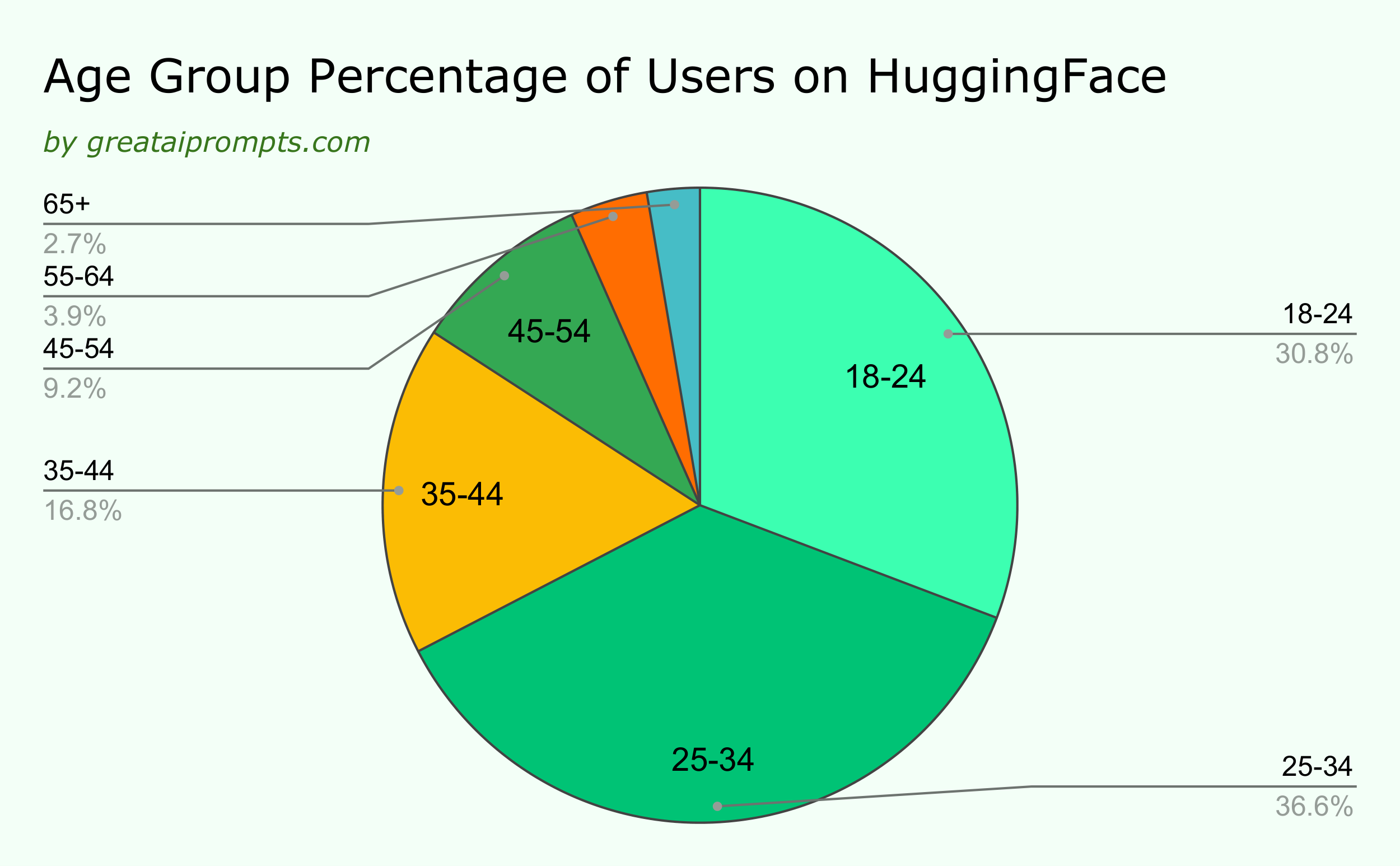

- Age group 25-34 makes up 36.87% of the user base. ()

- Hosts over 300k models, 250k datasets, and 250k spaces.

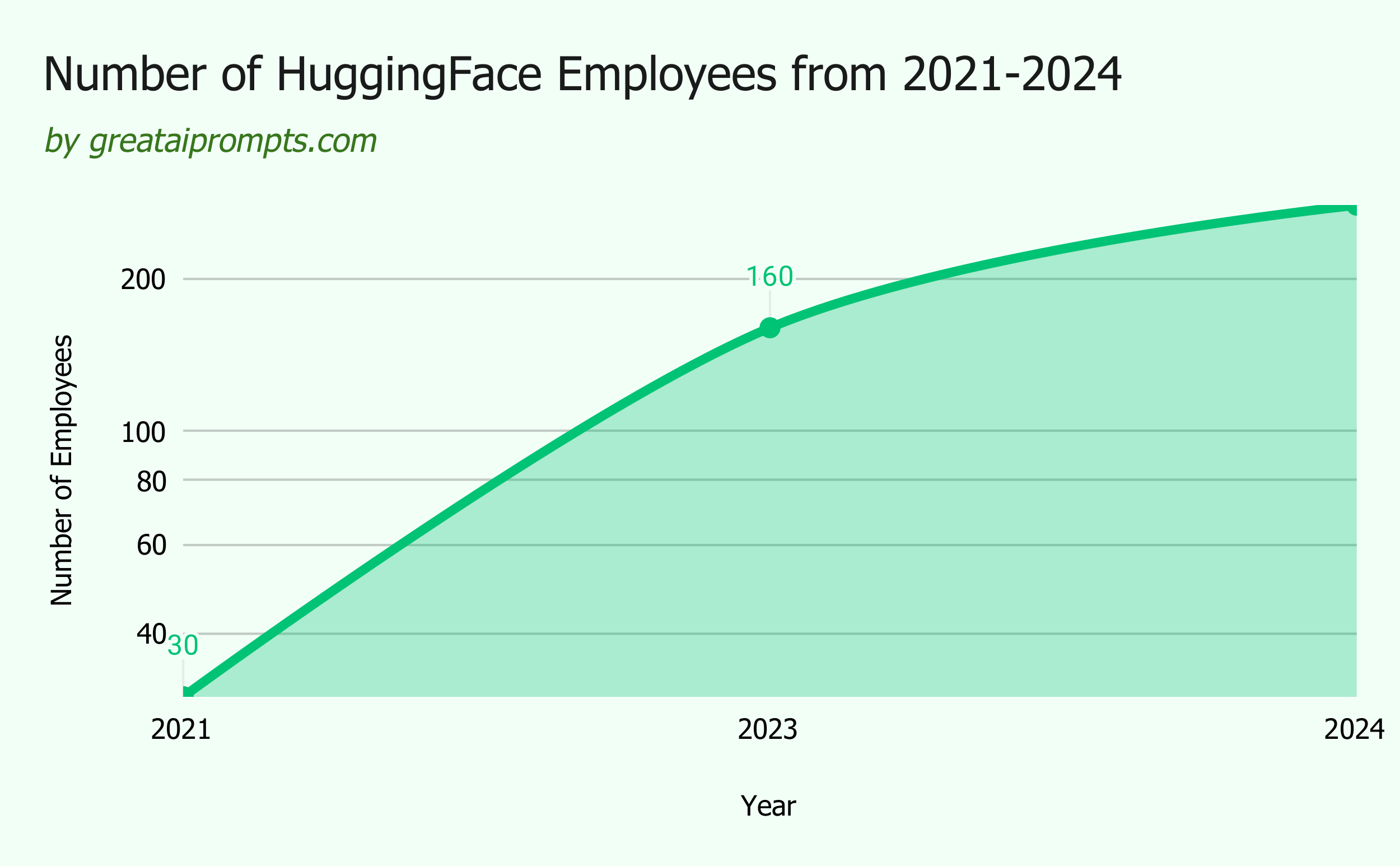

- 170 employees as of 2023, up from 30 in 2021. ()

- Hugging Face Pro offered at $25/month. ()

- Largest model contributions from “Other” category: 129,157 models.

- IBM contributed over 200 open models and datasets. ()

- Fake news detector model has as much as 95% accuracy. ()

Website Traffic and User Demographics

How Much Website Traffic Does Hugging Face Attract?

Hugging Face, a prominent AI platform and community, has maintained consistent traffic levels recently.

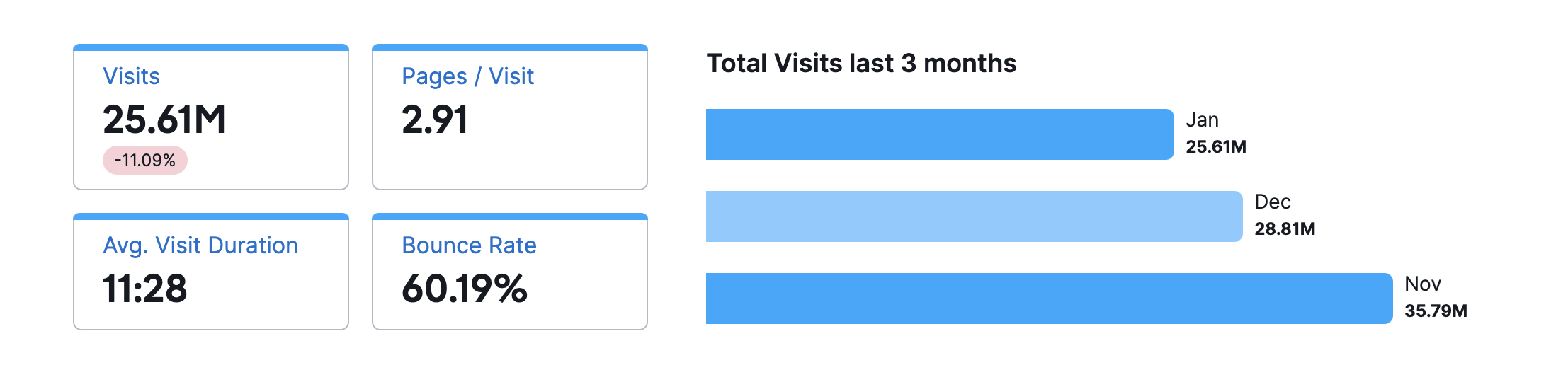

In January 2024, the website attracted 28.81 million visits, with users spending an average of 10 minutes and 39 seconds per session. However, traffic was down 19.5% compared with November.
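Percentage changes hide the absolute numbers. As a worked example, and assuming the 19.5% drop is measured against November’s total visits, the earlier figure can be backed out with previous = current / (1 + change). The helper name `implied_previous` is our own:

```python
def implied_previous(current: float, pct_change: float) -> float:
    """Return the earlier value implied by a current value and its percent change.

    pct_change is in percent, e.g. -19.5 for a 19.5% decline.
    """
    return current / (1 + pct_change / 100)


january_visits_m = 28.81  # January 2024 visits, in millions (from the article)
change_vs_november = -19.5  # reported change versus November, in percent

november_visits_m = implied_previous(january_visits_m, change_vs_november)
print(f"Implied November visits: {november_visits_m:.2f}M")  # Implied November visits: 35.79M
```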

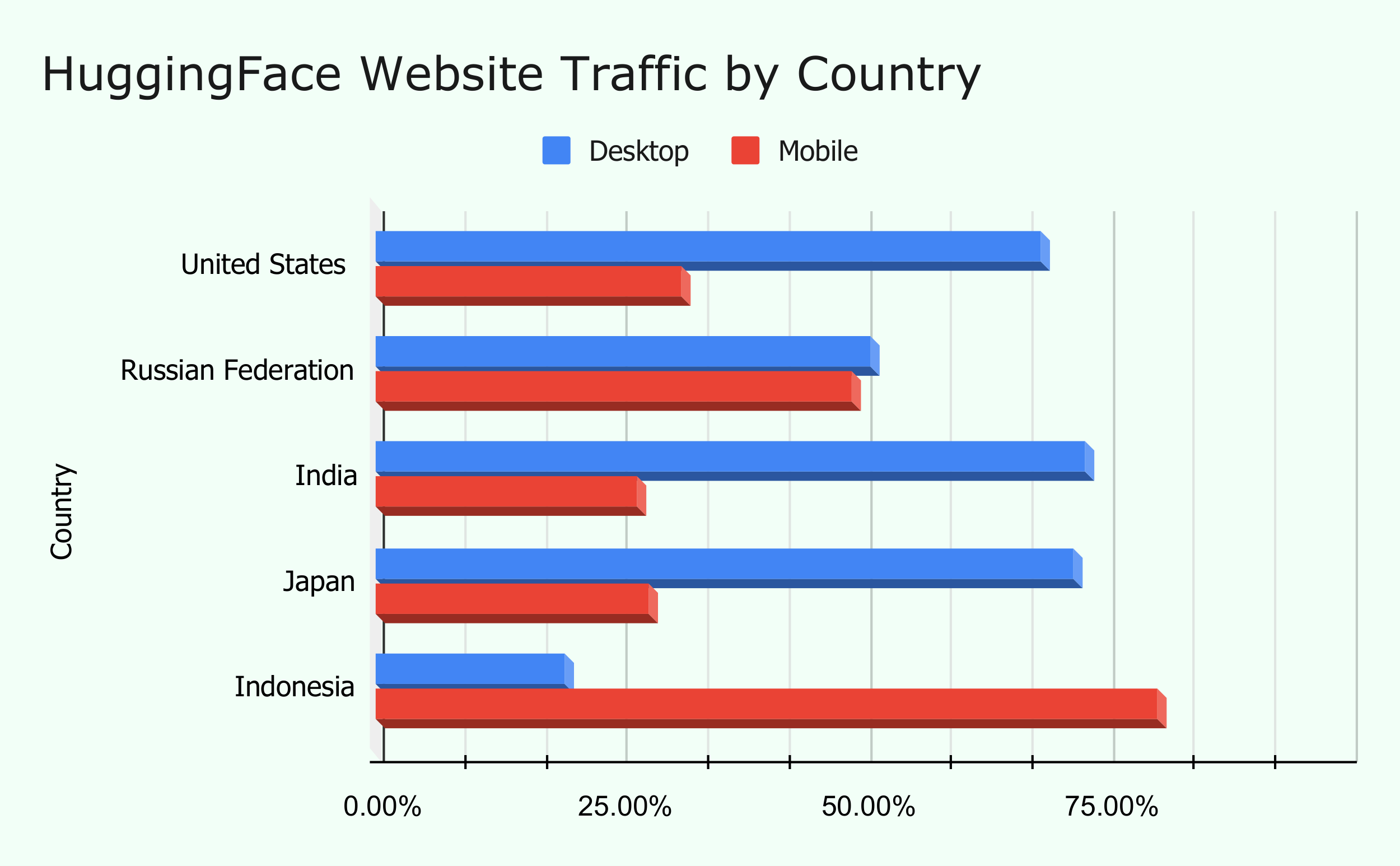

The primary audience for Hugging Face is concentrated in the United States, with significant followings in Russia, India, Japan, and Indonesia.

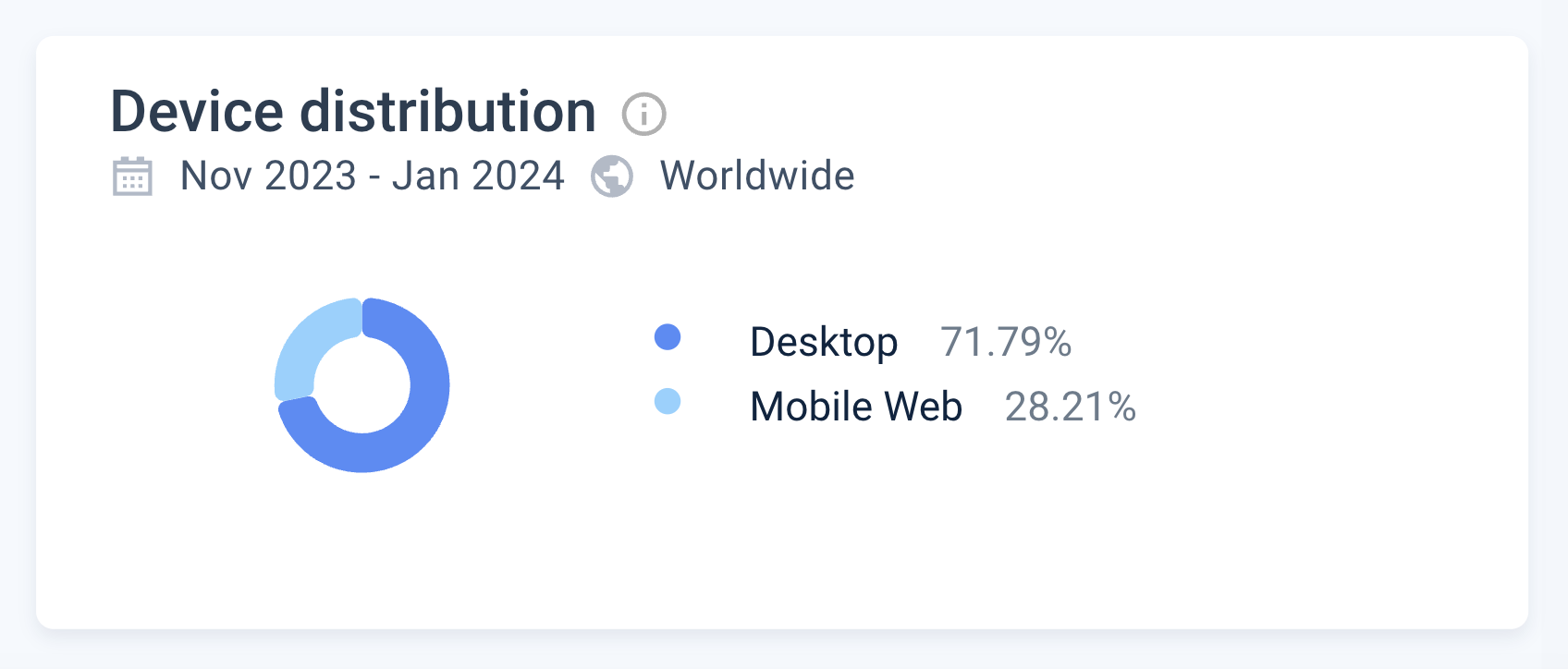

Traffic to the website is diverse in terms of device usage: desktops account for 68.03% of visits, followed by mobile devices at 31.97% and tablets at 7.22%.

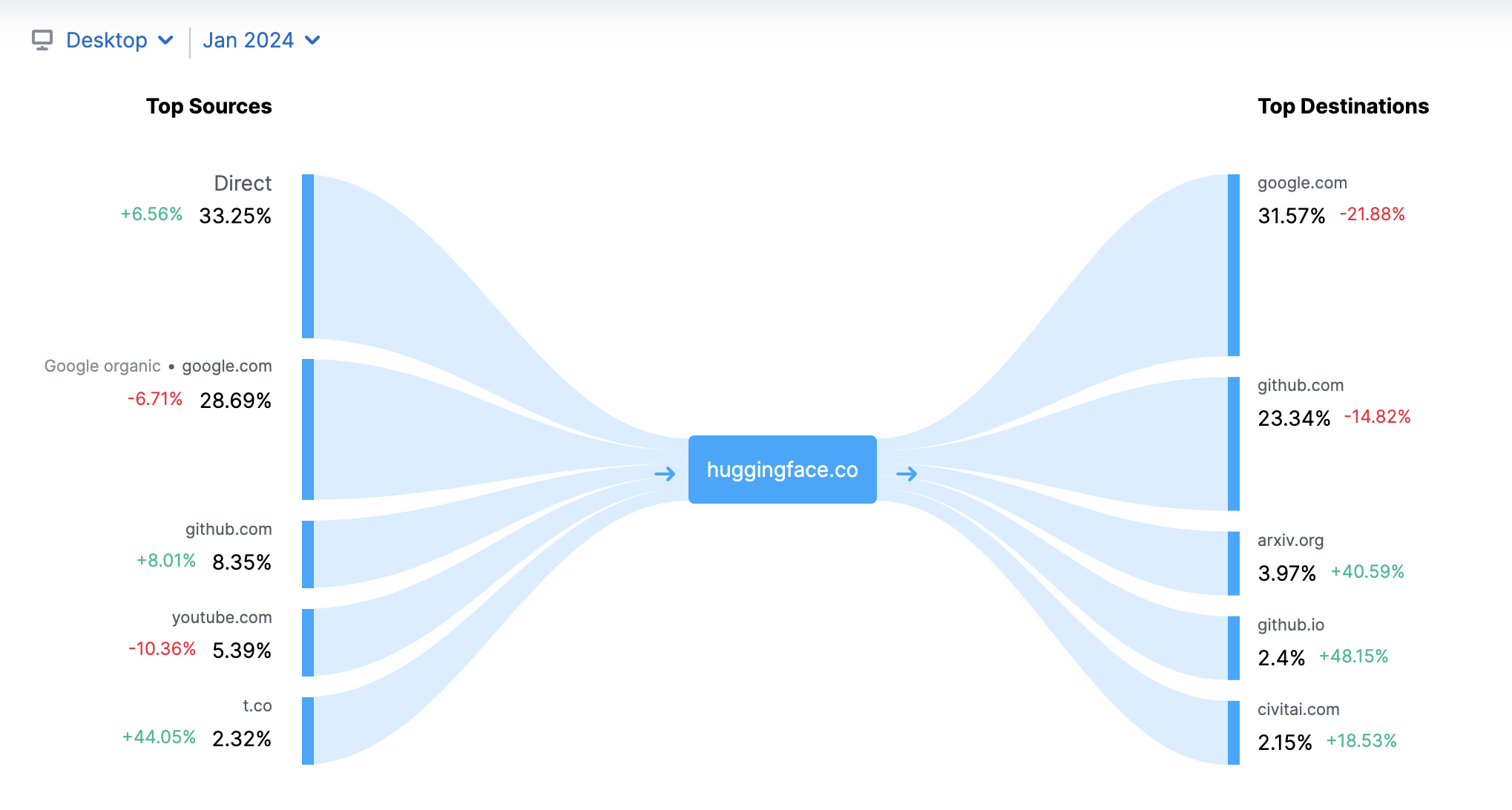

In terms of marketing channels, direct traffic holds the largest share at 45.06%, closely followed by organic search at 28.67%. Referrals, social media, display ads, and paid search make up the rest of the website’s traffic.

What is the User Base of Hugging Face?

Hugging Face attracts a diverse user base consisting mainly of AI researchers, data scientists, and developers.

As of 2023, the platform has more than 1.2 million registered users, 75.25% of them male and 24.75% female.

Most users fall within the 25-34 age bracket, which accounts for 36.87% of the user base, closely followed by 18-24-year-olds at 28.26%. In total, users aged 18-44 make up 83.03% of the platform’s users.
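The age figures above leave one bracket implicit. Since the 18-44 total covers the 18-24, 25-34, and 35-44 groups, the 35-44 share follows by subtraction. This sketch assumes all three percentages come from the same dataset, which the article does not state explicitly:

```python
# Age-bracket shares reported in the article, in percent of users.
share_18_24 = 28.26
share_25_34 = 36.87
share_18_44 = 83.03  # combined share of users aged 18-44

# The 35-44 bracket is the part of the 18-44 total not covered by the two younger brackets.
share_35_44 = round(share_18_44 - share_18_24 - share_25_34, 2)

# Everyone outside 18-44 (under 18 plus 45 and older).
share_outside = round(100 - share_18_44, 2)

print(f"Implied 35-44 share: {share_35_44}%")  # Implied 35-44 share: 17.9%
print(f"Outside 18-44: {share_outside}%")  # Outside 18-44: 16.97%
```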

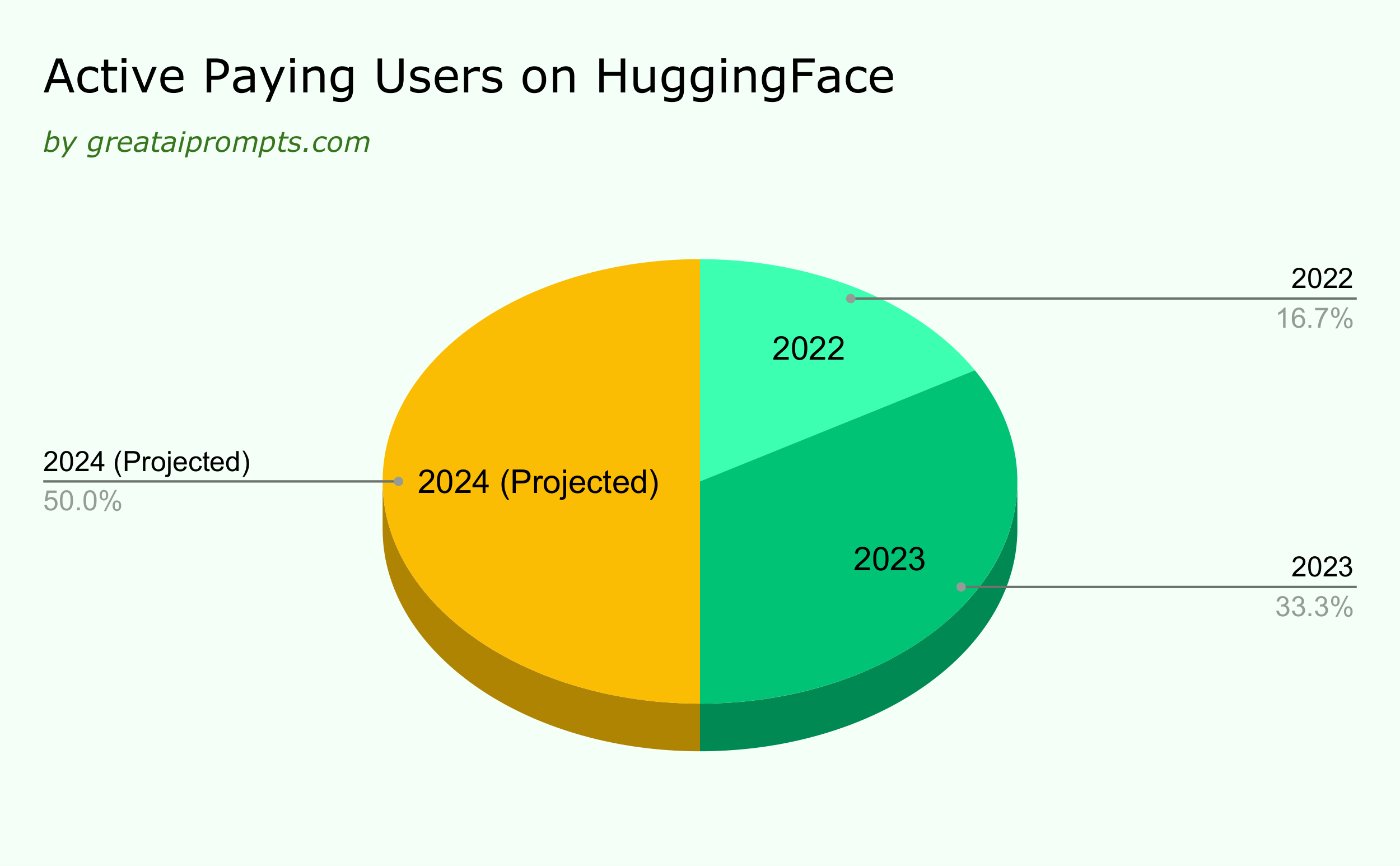

Active Paying Users on Hugging Face

Hugging Face has more than 1,000 active paying customers, including prominent companies such as Intel, Pfizer, Bloomberg, and eBay. By 2025, its active paying user base is projected to grow by nearly 1,500.

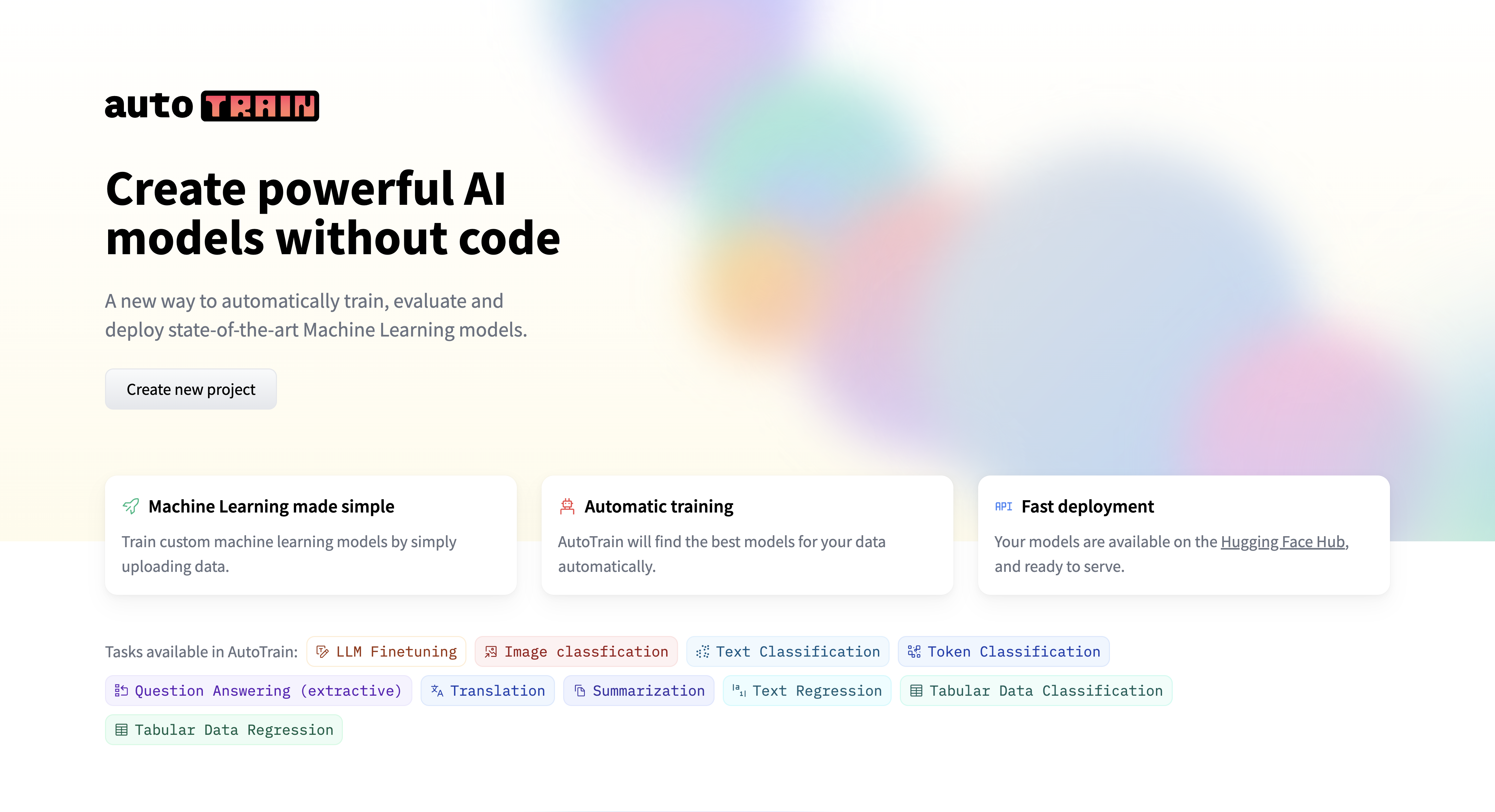

The platform provides services like AutoTrain, Spaces, and Inference Endpoints, with charges billed directly to the linked credit card.

Moreover, Hugging Face collaborates with cloud providers like AWS and Azure to enable seamless integration into customers’ preferred cloud setups.

Geographical Distribution of Hugging Face

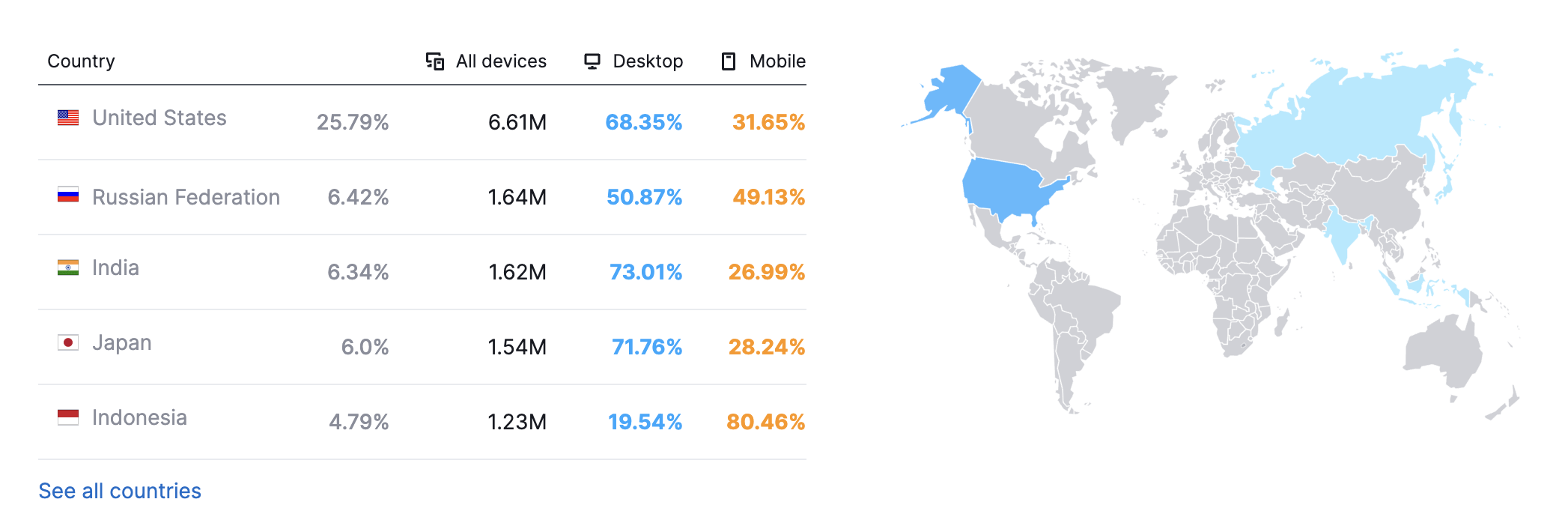

Hugging Face attracts users from diverse geographical locations, with the United States, India, and Russia emerging as pivotal hubs for its core audience.

Website traffic data shows that the United States accounts for 25.06% of visitors, followed by India at 10.44% and Russia at 7.06%.

| Country | Visits (All Devices) | Desktop Share | Mobile Share |
|---|---|---|---|
| United States | 6.61M | 68.35% | 31.65% |
| Russian Federation | 1.64M | 50.87% | 49.13% |
| India | 1.62M | 73.01% | 26.99% |
| Japan | 1.54M | 71.76% | 28.24% |
| Indonesia | 1.23M | 19.54% | 80.46% |

Source
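The table reports device shares rather than counts. Multiplying each country’s total by its desktop share gives approximate desktop and mobile visits. This minimal sketch copies the five rows above and, like the table, treats every visit as either desktop or mobile; the `CountryTraffic` class is a hypothetical helper for illustration:

```python
from dataclasses import dataclass


@dataclass
class CountryTraffic:
    """One row of the country table: total visits plus the desktop share."""

    country: str
    visits_m: float  # total visits in millions, all devices
    desktop_pct: float  # desktop share of this country's visits, in percent

    def split(self) -> tuple[float, float]:
        """Return (desktop, mobile) visits in millions.

        Mobile is computed as total minus desktop, matching the table's
        assumption that every visit is either desktop or mobile.
        """
        desktop = self.visits_m * self.desktop_pct / 100
        return desktop, self.visits_m - desktop


# Rows copied from the country table.
ROWS = [
    CountryTraffic("United States", 6.61, 68.35),
    CountryTraffic("Russian Federation", 1.64, 50.87),
    CountryTraffic("India", 1.62, 73.01),
    CountryTraffic("Japan", 1.54, 71.76),
    CountryTraffic("Indonesia", 1.23, 19.54),
]

for row in ROWS:
    desktop, mobile = row.split()
    print(f"{row.country:<20} desktop {desktop:.2f}M  mobile {mobile:.2f}M")
```

Computing mobile as total minus desktop keeps the two parts summing exactly to each country’s total.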

Device preferences also vary across regions: desktop usage dominates in the United States (68.35%) and India (73.01%), Russia is split almost evenly between desktop and mobile, and mobile devices are clearly favored in Indonesia (80.46%).

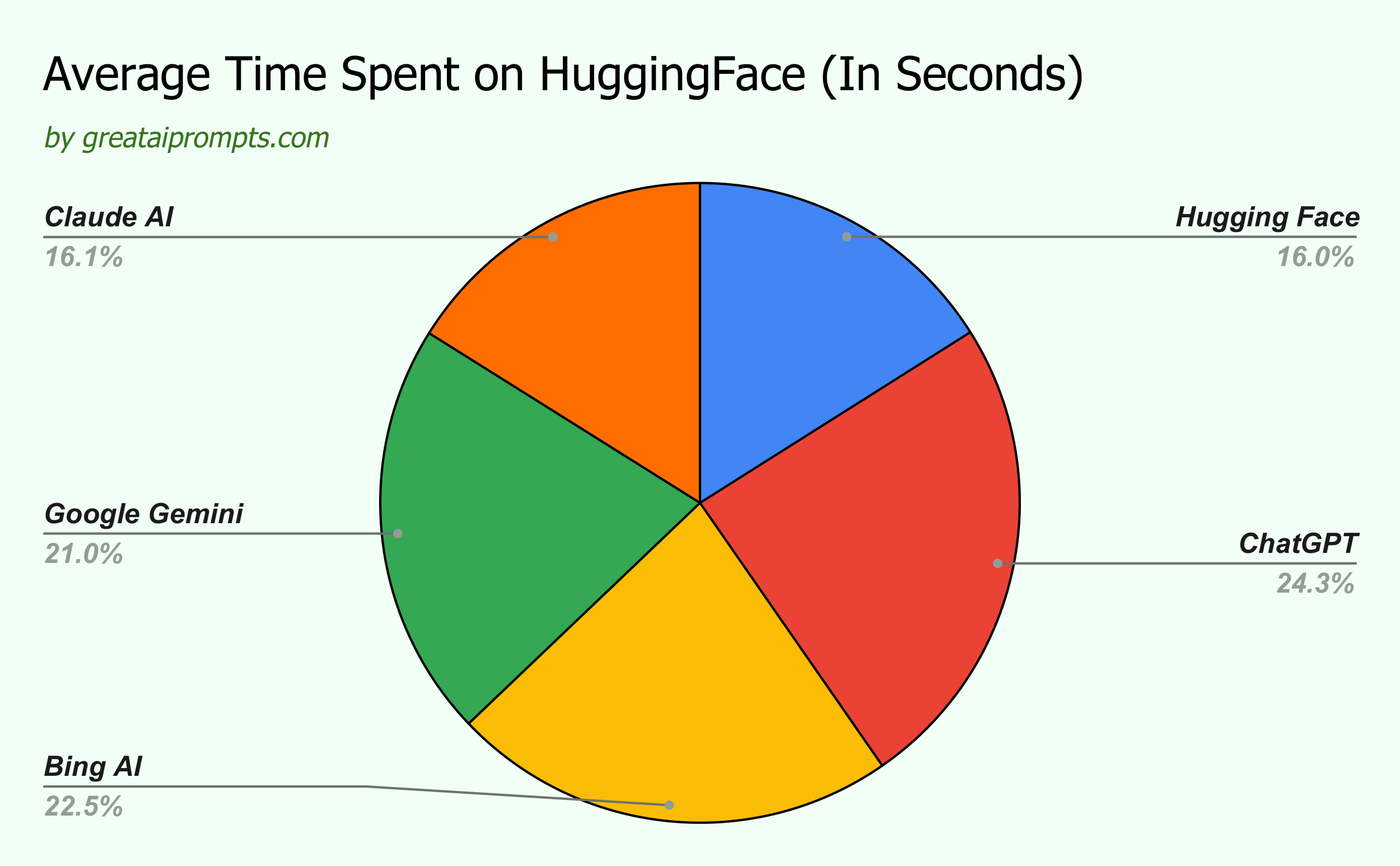

Average Time Users Spend on Hugging Face

Users spend an average of 4 minutes and 59 seconds on the Hugging Face website, which is quite low compared with OpenAI’s ChatGPT, Character AI, Claude, or Bing AI.

Funding and Valuation Statistics

Now, let’s have a look at a few of Hugging Face’s key funding, revenue, and valuation statistics.

Revenue and Valuation

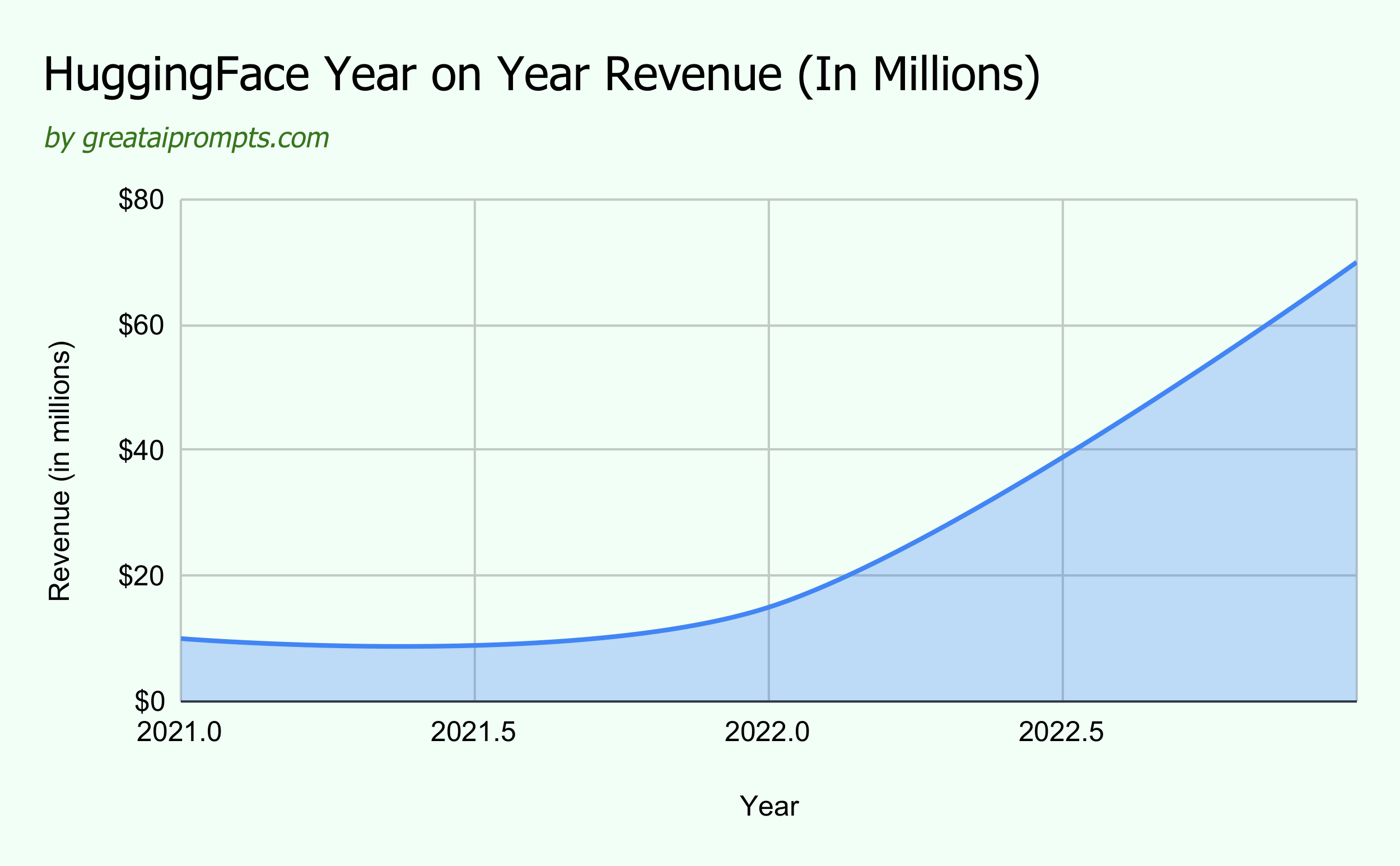

According to estimates from Sacra, Hugging Face reached $70 million in annual recurring revenue (ARR) by the end of 2023, an impressive 367% increase over the previous year.

This surge in revenue was mainly due to lucrative consulting contracts with major AI companies like Nvidia, Amazon, and Microsoft. The company’s revenue model includes paid individual ($9/month) and team ($20/month) plans, with a significant portion of revenue coming from enterprise-level services.

Regarding valuation, Hugging Face reached a valuation of $4.5 billion after securing $235 million in funding from investors such as Google, Amazon, Nvidia, Intel, Salesforce, and others.

This substantial valuation reflects the market’s confidence in Hugging Face’s innovative AI software and hosting services.

| Metric | Value |
|---|---|
| Revenue (2021) | $10 million |
| Valuation (May 2022) | $2 billion |
| Latest Valuation (August 2023) | $4.5 billion |
| Series D Funding (August 2023) | $235 million |

Funding

As of 2023, Hugging Face has raised $395 million in total funding. This funding has been crucial in supporting the company’s growth initiatives, product development, and expansion into new markets.

Notable investors like Google, Nvidia, and other tech giants have expressed strong support for Hugging Face’s vision and offerings.

Does Hugging Face Make Money?

In 2023, Hugging Face reached an annual recurring revenue (ARR) of $70 million, a remarkable 367% increase over the previous year.

This surge in revenue was mainly driven by lucrative consulting contracts with leading AI companies such as Nvidia, Amazon, and Microsoft.

Here are the company’s revenue statistics:

| Year | Revenue (ARR) | Growth Rate (y/y) |
|---|---|---|
| 2022 | $10 million | N/A |
| 2023 | $70 million | 367% |
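Year-over-year growth is (current - previous) / previous × 100. Applying that definition to the figures in the table is a useful sanity check: growth from $10 million to $70 million works out to 600%, while a 367% increase to $70 million implies a 2022 base of roughly $15 million. A minimal sketch of both calculations, with helper names of our own choosing:

```python
def yoy_growth(previous: float, current: float) -> float:
    """Year-over-year growth in percent: (current - previous) / previous * 100."""
    return (current - previous) / previous * 100


def implied_base(current: float, growth_pct: float) -> float:
    """Prior-year value implied by a current value and a growth rate in percent."""
    return current / (1 + growth_pct / 100)


# Figures in millions of US dollars, taken from the revenue table.
print(f"Growth from $10M to $70M: {yoy_growth(10, 70):.0f}%")  # Growth from $10M to $70M: 600%
print(f"Base implied by 367% growth to $70M: ${implied_base(70, 367):.1f}M")  # Base implied by 367% growth to $70M: $15.0M
```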

How Hugging Face Makes Money (Revenue Model)

Hugging Face generates revenue through various channels, including subscription plans, enterprise solutions, and cloud services. Here’s a breakdown of how Hugging Face earns money:

- Subscription Plans:

  - Hugging Face offers individual and team subscription plans priced at $9 per month and $20 per month, respectively.

  - These plans give users access to premium features such as private dataset viewing, inference capabilities, and early access to new features.

- Enterprise Solutions:

  - Enterprise-level services account for a significant portion of Hugging Face’s revenue.

- Cloud Services:

  - Through its cloud platform, Hugging Face offers NLP and AI services such as model hosting, inference, and optimization.

  - Users are billed based on their usage of these services, including fees for model hosting and optimization.

- Market Positioning:

Market Position and Competition

Direct and Indirect Competitors

Hugging Face competes in the rapidly growing generative AI market, particularly in large language and vision models.

It isn’t a direct competitor to ChatGPT or Google’s Bard, but it competes through strategic partnerships and the commercialization of AI models for enterprise use.

For instance, Hugging Face’s collaboration with AWS aims to make deploying generative AI applications more accessible to developers.

Based on data from Similarweb, here’s a table outlining Hugging Face’s top competitors, their industry focus, and the total visits they received in December 2023:

| Rank | Competitor | Industry Focus | Total Visits (December 2023) | Global Rank |
|---|---|---|---|---|
| 1 | paperswithcode.com | Machine learning research and implementation | 2.2M | #29,926 |
| 2 | openai.com | Advanced AI systems (GPT-4) | 1.6B | #25 |
| 3 | civitai.com | Stable diffusion AI art models | 24.2M | #1,301 |
| 4 | wandb.ai | Machine learning model tracking and comparison | 1.6M | #32,001 |
| 5 | github.com | Software development and open source collaboration | 424.8M | #78 |
| 6 | raw.githubusercontent.com | – | 7.7M | #11,474 |
| 7 | stablediffusionweb.com | Stable diffusion online demo for art creation | 3.5M | #19,080 |
| 8 | notion.so | Workspace productivity tools | 141.2M | #200 |
| 9 | replicate.com | Cloud API for machine learning models | 7.7M | #7,370 |
| 10 | stability.ai | AI technology development | 3.5M | #19,790 |

Ways Hugging Face Differentiates Itself from Competitors

- Open-Source Community: Hugging Face fosters an open-source community platform, enabling developers to collaborate on and share models, datasets, and APIs for NLP tasks. This stands in contrast to closed-source platforms like OpenAI and Perplexity. (Source)

- Model-Agnostic Approach: Hugging Face’s approach allows users to use high-quality closed-source models to train their own low-cost, open-source models. This gives ML developers the flexibility to mix and match models to find the best solution for their specific needs.

- Collaborative Workspace: Hugging Face provides a collaborative workspace for ML developers, facilitating teamwork on projects, sharing insights, and refining each other’s work. (Source)

- Diverse Model Library: Hugging Face offers a diverse library of pre-trained models for tasks such as text classification, sentiment analysis, and translation. This extensive library distinguishes it from competitors focused solely on large language models.

- Cloud Platform: Hugging Face operates a cloud platform offering NLP and AI services like model hosting, inference, and optimization. This allows users to easily deploy, manage, and scale their NLP models in a cloud environment. (Source)

- Enterprise Solutions: Hugging Face delivers enterprise solutions built on its NLP and AI technology, including custom model training, deployment, integration services, and premium features and support.

Other Important Statistical Data for Hugging Face

There are many other statistics you should know about Hugging Face beyond traffic, revenue, and demographics. Let’s take a look at them:

What Products Has Hugging Face Built?

In 2020, they introduced products like AutoTrain and the Inference API, targeting enterprise clients.

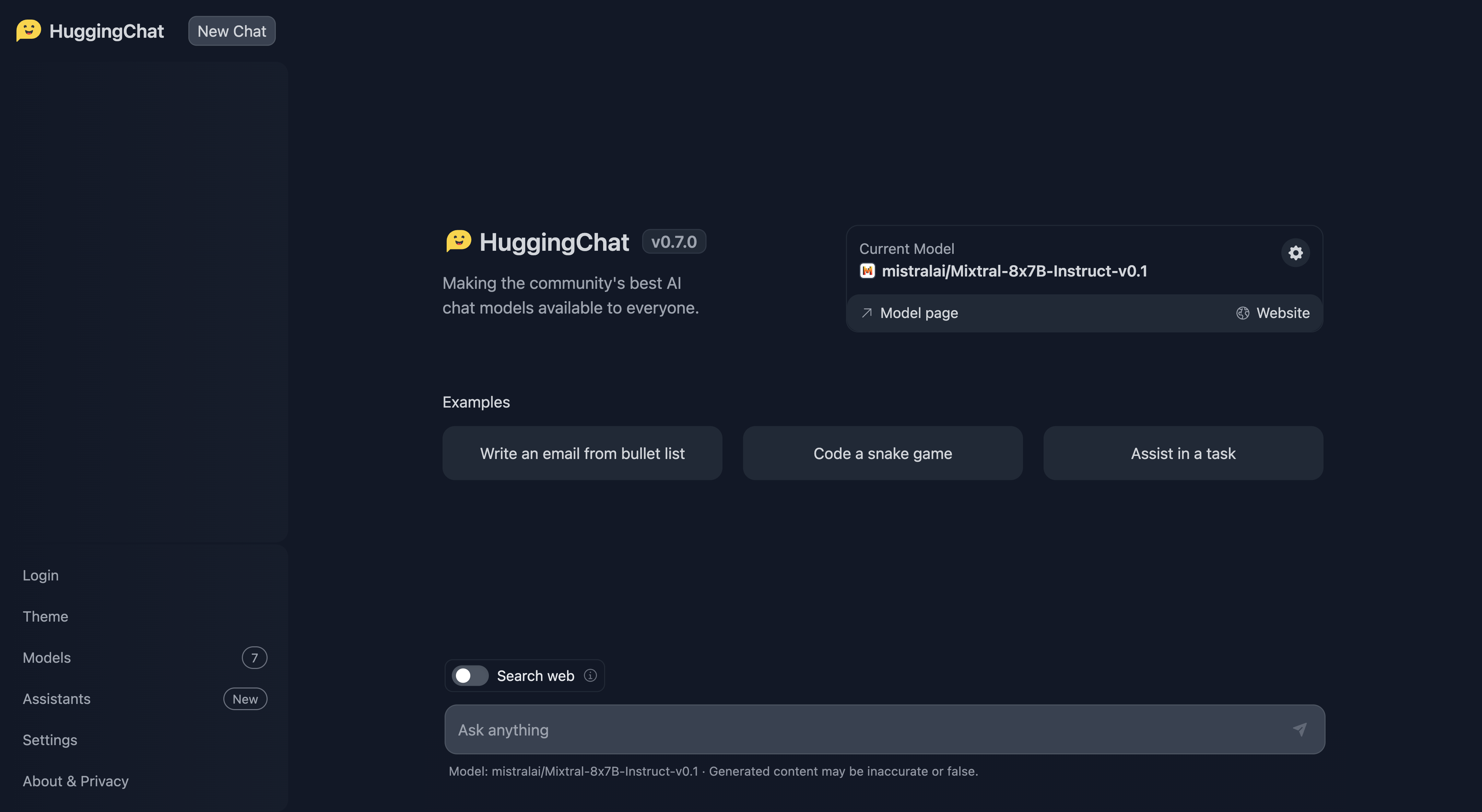

In April 2023, they launched HuggingChat, an open-source generative AI chat assistant.

HuggingChat


Their notable project BLOOM, a 176-billion-parameter large language model released in July 2022, shows their commitment to large language models. Like GPT-3, BLOOM supports multiple natural languages and programming languages.

Hugging Face also offers AutoML solutions and the Hugging Face Hub platform for hosting code repositories and discussions.

Their NLP library aims to democratize NLP by providing datasets and tools. Popular among big tech companies, Hugging Face manages BigScience, a research initiative in which 900 researchers train models on a large multilingual dataset.

Models like BERT and DistilBERT see significant weekly downloads, and StarCoder, their AI coding assistant, supports 80 programming languages.

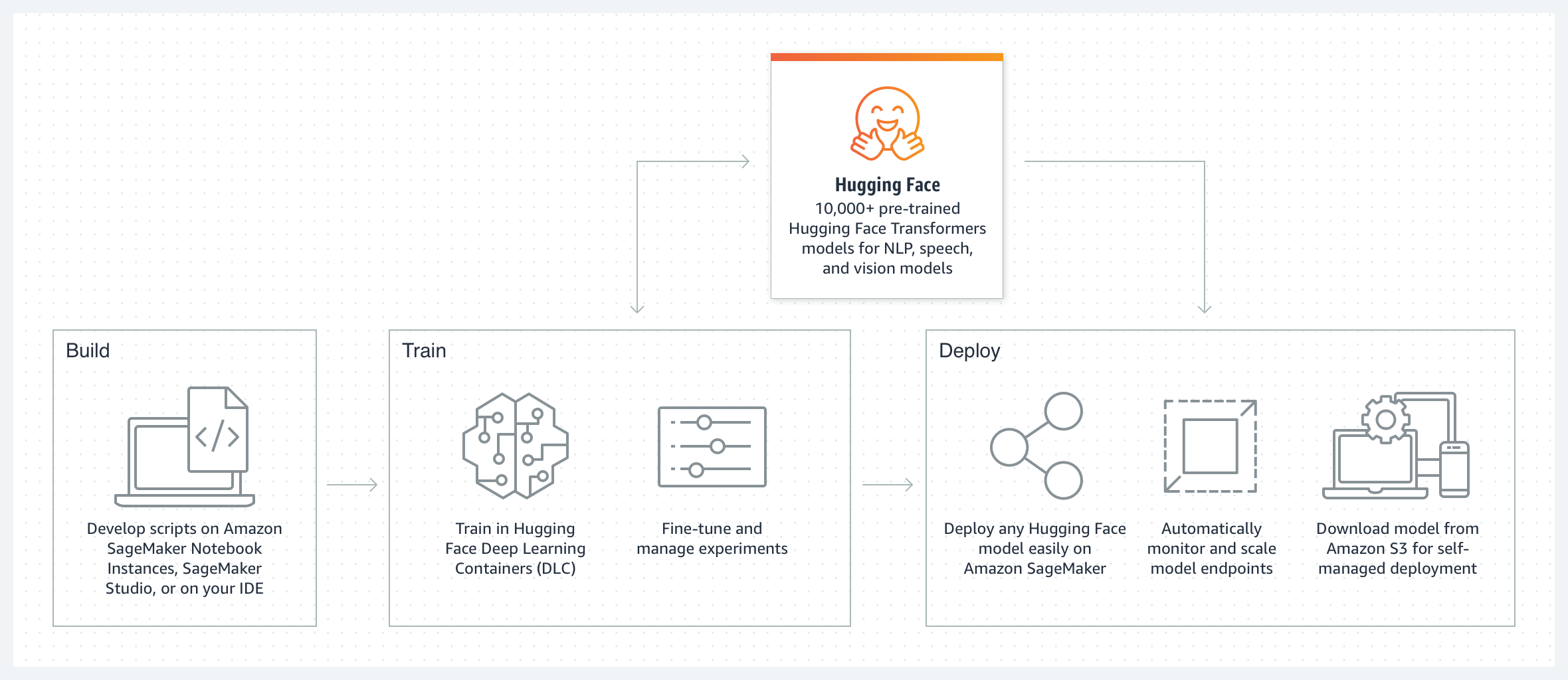

Collaborating with AWS, Hugging Face offers Deep Learning Containers for NLP model deployment on Amazon SageMaker.

How Many Models and Datasets Are Hosted by Hugging Face?

With over 300,000 models, 250,000 datasets, and 250,000 Spaces, Hugging Face offers one of the most extensive collections available.

The Hugging Face Hub hosts over 350,000 models, 75,000 datasets, and 150,000 demo apps, fostering collaboration and innovation. With support for over 130 architectures and more than 75,000 datasets in over 100 languages, users have access to a diverse range of resources.

Additionally, Hugging Face hosts popular machine-learning models like BERT and GPT-2, together with a Metrics library for evaluating model predictions.

Hugging Face Employee Data

Hugging Face has grown its workforce to 279 employees, a notable 39% increase over the last year that signals significant expansion.

With an estimated annual revenue of $40 million, the company’s revenue per employee is roughly $143,487.
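Both derived figures in this paragraph can be reproduced from the inputs. Revenue per employee is revenue divided by headcount, and a 39% headcount increase implies a prior-year workforce of 279 / 1.39. The sketch below uses only the estimates quoted above:

```python
revenue_usd = 40_000_000  # estimated annual revenue (from the article)
employees = 279  # current headcount (from the article)
headcount_growth_pct = 39  # year-over-year headcount growth, in percent (from the article)

# Revenue per employee: total revenue divided by headcount.
revenue_per_employee = revenue_usd / employees

# Headcount a year earlier: current headcount divided by (1 + growth rate).
previous_headcount = employees / (1 + headcount_growth_pct / 100)

print(f"Revenue per employee: ${revenue_per_employee:,.0f}")  # Revenue per employee: $143,369
print(f"Implied headcount a year earlier: {previous_headcount:.0f}")  # Implied headcount a year earlier: 201
```

This gives about $143,369 per employee, close to the $143,487 cited above.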

Furthermore, Hugging Face raised $235 million in its Series D round and was valued at $4.5 billion as of August 2023.

Here is a table summarizing Hugging Face’s employee data:

| Metric | Value |
|---|---|
| Number of Employees | 279 |
| Employee Growth (y/y) | 39% |
| Estimated Annual Revenue | $40 million |
| Revenue per Employee | $143,487 |
| Latest Funding Round (Series D) | $235 million |
| Valuation (August 2023) | $4.5 billion |

How Many Stars Does Hugging Face Have on GitHub?

Hugging Face has a considerable number of stars on GitHub, indicating its popularity and community engagement.

Hugging Face’s Transformers library has 121,000 stars on GitHub, a metric often seen as a measure of success for developer tools.

For comparison, PyTorch, Meta’s popular machine-learning framework, has 76,000 stars, and Google’s TensorFlow has 181,000 stars. Snowflake’s Streamlit has 30,500 stars in comparison with Gradio’s 26,000.
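Star counts change daily, so figures like these date quickly. One way to refresh them is GitHub’s public REST API, whose `GET /repos/{owner}/{repo}` endpoint returns a `stargazers_count` field. This is a minimal sketch using only Python’s standard library; it assumes unauthenticated access, which GitHub rate-limits, and the helper names are our own:

```python
import json
import urllib.request

# GitHub REST API endpoint for repository metadata.
GITHUB_API = "https://api.github.com/repos/{owner}/{repo}"


def parse_star_count(payload: str) -> int:
    """Extract the star count from a GitHub repository JSON payload."""
    return int(json.loads(payload)["stargazers_count"])


def fetch_star_count(owner: str, repo: str, timeout: float = 10.0) -> int:
    """Fetch the current star count for owner/repo from the GitHub REST API.

    Unauthenticated requests are rate-limited by GitHub, so call this sparingly.
    """
    url = GITHUB_API.format(owner=owner, repo=repo)
    request = urllib.request.Request(url, headers={"Accept": "application/vnd.github+json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_star_count(response.read().decode("utf-8"))
```

For example, `fetch_star_count("huggingface", "transformers")` returns the live star count for the Transformers repository; the same call works for `pytorch/pytorch`, `tensorflow/tensorflow`, `streamlit/streamlit`, and `gradio-app/gradio`. Parsing is kept in a separate function so it can be checked without network access.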

And That’s a Wrap

Those are all the essential statistics you need to know about Hugging Face.

The remarkable growth of Hugging Face, particularly with its innovative AI models and collaborative platform, is truly impressive.

Now, over to you:

Will Hugging Face sustain this rapid growth momentum? Do you anticipate continued expansion from Hugging Face in the long run?

If you have any further questions or thoughts on this topic, feel free to share them in the comments below.

Let’s engage in a discussion about it.

This article was originally published at www.greataiprompts.com