Q-Star: OpenAI’s Exploration of Q-Learning in Pursuit of Artificial General Intelligence (AGI)

This article is based on a Reuters news article from 2023-11-22 titled “OpenAI Researchers Warned Board of AI Breakthrough Ahead of CEO Ouster”.

Q-learning, a cornerstone of artificial intelligence, is integral to reinforcement learning. This model-free algorithm aims to learn the value of actions in specific states, with the goal of finding an optimal policy that maximizes cumulative reward over time.

Fundamentals of Q-Learning

At its core, Q-learning hinges on the Q-function, or state-action value function. This function estimates the expected total reward of taking a given action in a given state and following the optimal policy thereafter.

The Q-Table: A key feature in simpler Q-learning applications is the Q-table. Each state is represented by a row, and each action by a column. The Q-values, one per state-action pair, are continually updated as the agent learns from its environment.

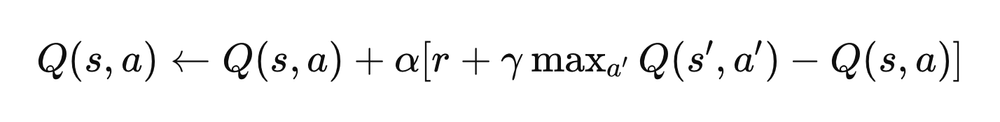
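A Q-table of this kind can be sketched in a few lines of Python. This is a minimal illustration, not code from any particular system: the table sizes and the helper names `make_q_table` and `best_action` are assumptions made for the example.

```python
# Hypothetical sizes for a tiny environment (not taken from the article).
N_STATES = 4
N_ACTIONS = 2


def make_q_table(n_states, n_actions, initial_value=0.0):
    """Return a table with one row per state and one column per action."""
    return [[initial_value for _ in range(n_actions)] for _ in range(n_states)]


def best_action(q_table, state):
    """Return the column index of the highest Q-value in the state's row."""
    row = q_table[state]
    return max(range(len(row)), key=lambda a: row[a])


q_table = make_q_table(N_STATES, N_ACTIONS)
q_table[2][1] = 0.5  # pretend the agent has learned that action 1 pays off in state 2
print(best_action(q_table, 2))  # prints 1
```

Reading a policy off the table is just a row lookup followed by an argmax, which is why the tabular form is so easy to reason about in small problems.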

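The Update Rule: Q-learning’s essence is encapsulated in its update formula:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

Here α is the learning rate, γ is the discount factor, r is the reward received after taking action a in state s, and s′ is the resulting state.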
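In code, the standard tabular update moves the current estimate a fraction of the way toward the observed reward plus the discounted value of the best action in the next state. The sketch below assumes a list-of-lists Q-table; the function name `q_update` and the default values for the learning rate `alpha` and discount factor `gamma` are illustrative choices, not values from the article.

```python
def q_update(q_table, state, action, reward, next_state,
             alpha=0.1, gamma=0.9, done=False):
    """Apply one tabular Q-learning update in place and return the new value."""
    # Value of the best action in the next state; zero once the episode has ended.
    best_next = 0.0 if done else max(q_table[next_state])
    td_target = reward + gamma * best_next
    td_error = td_target - q_table[state][action]
    q_table[state][action] += alpha * td_error
    return q_table[state][action]


# Two states, two actions; state 1 already has a known good action.
q = [[0.0, 0.0], [0.0, 1.0]]
new_value = q_update(q, state=0, action=1, reward=1.0, next_state=1,
                     alpha=0.5, gamma=0.9)
print(new_value)  # roughly 0.95: halfway from 0.0 toward 1.0 + 0.9 * 1.0
```

Only the single entry for the visited state-action pair changes on each step; every other entry in the table is left alone.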

Exploration vs. Exploitation: Balancing the trial of new actions against the use of known information is crucial. Strategies like the ε-greedy method manage this balance by choosing a random action with a set probability ε and the best-known action otherwise.
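An ε-greedy action selector is short enough to show in full. This is a minimal sketch: the function name `epsilon_greedy`, the example Q-values, and the choice of ε = 0.1 are assumptions for illustration only.

```python
import random


def epsilon_greedy(q_row, epsilon, rng=random):
    """Pick a random action with probability epsilon, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))  # explore: uniform over all actions
    return max(range(len(q_row)), key=lambda a: q_row[a])  # exploit


rng = random.Random(0)  # seeded so the run is repeatable
q_row = [0.1, 0.7, 0.2]  # hypothetical Q-values for one state
picks = [epsilon_greedy(q_row, epsilon=0.1, rng=rng) for _ in range(1000)]
print(picks.count(1) / len(picks))  # the greedy action dominates
```

In practice ε is often decayed over training so that the agent explores widely at first and exploits more as its estimates improve.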

Q-Learning’s Role in Advancing AGI

AGI refers to an AI’s capability to apply its intelligence broadly, much like human cognitive abilities. While Q-learning is a step in this direction, it faces several hurdles:

- Scalability: Q-learning’s applicability to large state-action spaces is limited, a critical issue for AGI’s diverse problem-solving needs.
- Generalization: AGI requires extrapolating from learned experiences to new situations, a challenge for Q-learning, which generally needs specific training for every scenario.
- Adaptability: AGI’s dynamic adaptability to evolving environments is at odds with Q-learning’s need for stable environments.
- Integration of Cognitive Skills: AGI involves a mix of various skills, including reasoning and problem-solving, beyond Q-learning’s learning-focused approach.
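The scalability hurdle is easy to quantify for a tabular method, because the table needs one entry per state-action pair. The sketch below uses a hypothetical board where each cell independently takes one of three values and the agent has one action per cell; it counts every configuration, so it is an upper bound rather than the number of reachable states.

```python
def q_table_entries(cells, values_per_cell, n_actions):
    """Upper bound on Q-values needed when each cell is an independent state variable."""
    n_states = values_per_cell ** cells
    return n_states * n_actions


for cells in (4, 9, 16):
    # Hypothetical setup: 3 possible values per cell, one action per cell.
    print(cells, q_table_entries(cells, values_per_cell=3, n_actions=cells))
# prints:
# 4 324
# 9 177147
# 16 688747536
```

Going from 9 to 16 cells multiplies the table size by several thousand, which is why plain Q-tables stop being practical well before problems reach real-world complexity.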

Progress and Future Outlook

- Deep Q-Networks (DQN): By merging Q-learning with deep neural networks, DQNs are better suited to complex tasks because of their ability to handle high-dimensional spaces.
- Transfer Learning: Techniques that allow Q-learning models to reuse knowledge across different domains hint at the generalization required for AGI.
- Meta-Learning: Integrating meta-learning into Q-learning could enable AI to refine its learning strategies, a key component for AGI.
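The core idea behind DQN, replacing the table with a parameterized function, can be illustrated without a neural network. The sketch below is a deliberate simplification and not DQN itself: it uses a linear approximator with one weight vector per action, and it omits the replay buffer and target network that DQN relies on. The function names and feature vectors are assumptions for the example.

```python
def q_values(weights, features):
    """Q(s, a) for every action: dot product of each action's weights with the features."""
    return [sum(w * x for w, x in zip(w_a, features)) for w_a in weights]


def semi_gradient_update(weights, features, action, reward, next_features,
                         alpha=0.1, gamma=0.9, done=False):
    """One semi-gradient Q-learning step on a linear approximator (in place)."""
    best_next = 0.0 if done else max(q_values(weights, next_features))
    td_error = reward + gamma * best_next - q_values(weights, features)[action]
    # For a linear model, the gradient of Q(s, a) with respect to the chosen
    # action's weights is simply the feature vector of the state.
    weights[action] = [w + alpha * td_error * x
                       for w, x in zip(weights[action], features)]
    return td_error


weights = [[0.0, 0.0], [0.0, 0.0]]  # 2 actions x 2 features, all zeros to start
err = semi_gradient_update(weights, [1.0, 0.0], action=1, reward=1.0,
                           next_features=[0.0, 1.0], done=True)
print(err, weights)
```

Because the weights are shared across all states with similar features, an update for one state also shifts the estimates for states the agent has never visited, which is what lets function approximation cope with spaces too large for a table.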

In its quest for AGI, OpenAI’s focus on Q-learning within Reinforcement Learning from Human Feedback (RLHF) is a noteworthy endeavor.

This article was originally published at www.artificial-intelligence.blog