Can humans learn to reliably detect AI-generated fakes? How do they impact us on a cognitive level?

OpenAI’s Sora system recently previewed a new wave of AI-generated synthetic media. It probably won’t be long before any kind of realistic media (audio, video, or image) can be generated from a prompt in seconds.

As these AI systems grow ever more capable, we’ll need to hone new critical-thinking skills to separate truth from fiction.

To date, Big Tech’s efforts to slow or stop deep fakes have amounted to little more than statements of intent, not for lack of conviction but because AI content is so realistic.

That makes it hard to detect at the pixel level, while other signals, like metadata and watermarks, have their own flaws.

Moreover, even if AI-generated content were detectable at scale, it would be difficult to separate authentic, purposeful content from content intended to spread misinformation.

Passing content to human reviewers and using ‘community notes’ (information attached to content, often seen on X) provides a possible solution. However, this sometimes involves subjective interpretation and risks incorrect labeling.

For example, in the Israel-Palestine conflict, we’ve seen disturbing images labeled as real when they were fake, and vice versa.

When a real image is labeled fake, this can create a ‘liar’s dividend,’ where someone can dismiss the truth as fake.

So, in the absence of technical methods for stopping deep fakes, what can we do about them?

And to what extent do deep fakes affect our decision-making and psychology?

For example, when people are exposed to fake political images, does this have a tangible impact on their voting behavior?

Let’s look at a couple of studies that assess exactly that.

Do deep fakes affect our opinions and psychological states?

One 2020 study, “Deepfakes and Disinformation: Exploring the Impact of Synthetic Political Video on Deception, Uncertainty, and Trust in News,” explored how deep fake videos influence public perception, particularly regarding trust in news shared on social media.

The research involved a large-scale experiment with 2,005 participants from the UK, designed to measure responses to various kinds of deep fake videos of former US President Barack Obama.

Participants were randomly assigned to view one of three videos:

- A 4-second clip showing Obama making a surprising statement without any context.

- A 26-second clip that included some hints about the video’s artificial nature but was primarily deceptive.

- A full video with an “educational reveal” where the deep fake’s artificial nature was explicitly disclosed, featuring Jordan Peele explaining the technology behind the deep fake.

Key findings

The study explored three key areas:

- Deception: The study found minimal evidence of participants believing the false statements within the deep fakes. The percentage of participants who were misled by the deep fakes was relatively low across all treatment groups.

- Uncertainty: However, a key result was increased uncertainty among viewers, especially those who saw the shorter, deceptive clips. About 35.1% of participants who watched the 4-second clip and 36.9% who saw the 26-second clip reported feeling uncertain about the video’s authenticity. In contrast, only 27.5% of those who viewed the full educational video felt this way.

- Trust in news: This uncertainty negatively affected participants’ trust in news on social media. Those exposed to the deceptive deep fakes showed lower trust than those who viewed the educational reveal.

A large proportion of people were deceived or uncertain about the different video types. Source: Sage Journals.

This suggests that exposure to deep fake imagery can sow lasting uncertainty.

Over time, fake imagery might weaken faith in all information, including the truth.

Similar results were demonstrated by a more recent 2023 study, “Face/Off: Changing the face of movies with deepfakes,” which also concluded that fake imagery has long-term impacts.

People ‘remember’ fake content after exposure

Conducted with 436 participants, the Face/Off study investigated how deep fakes might influence our recollection of movies.

Participants took part in an online survey designed to examine their perceptions and memories of both real and fictitious movie remakes.

The core of the survey involved presenting participants with six movie titles: a mix of two actual film remakes and four fictitious ones.

Movies were randomized and presented in two formats: half of the films were introduced through short text descriptions, and the other half were paired with short video clips.

Fictitious movie remakes consisted of versions of “The Shining,” “The Matrix,” “Indiana Jones,” and “Captain Marvel,” complete with descriptions falsely claiming the involvement of high-profile actors in these non-existent remakes.

For example, participants were told about a fake remake of “The Shining” starring Brad Pitt and Angelina Jolie, which never happened.

In contrast, the real movie remakes presented in the survey, such as “Charlie & The Chocolate Factory” and “Total Recall,” were described accurately and accompanied by real film clips. This mix of real and fake remakes was intended to test how participants distinguish between factual and fabricated content.

Participants were asked about their familiarity with each movie: whether they had seen the original film or the remake, or had any prior knowledge of them.

Key findings

- False memory phenomenon: A key result of the study is that almost half of the participants (49%) developed false memories of watching fictitious remakes, such as imagining Will Smith as Neo in “The Matrix.” This illustrates the lasting effect that suggestive media, whether deep fake videos or textual descriptions, can have on our memory.

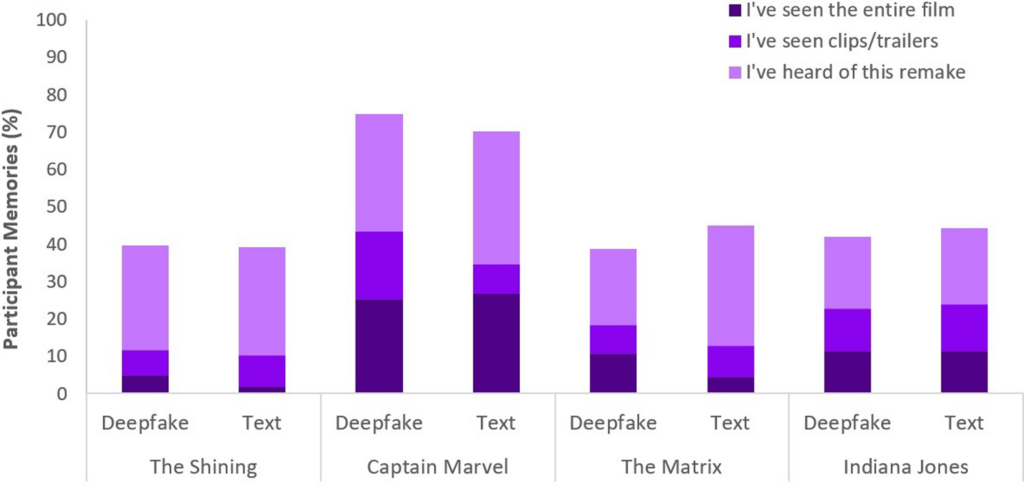

- Specifically, “Captain Marvel” topped the list, with 73% of participants recalling its fictitious remake, followed by “Indiana Jones” at 43%, “The Matrix” at 42%, and “The Shining” at 40%. Among those who mistakenly believed in these remakes, 41% thought the “Captain Marvel” remake was better than the original.

- Comparative influence of deep fakes and text: Another finding is that deep fakes, despite their visual and auditory realism, were no more effective at altering participants’ memories than textual descriptions of the same fictitious content. This suggests that the format of the misinformation, visual or textual, doesn’t significantly change its impact on memory distortion, at least in the context of film.

Memory responses for each of the four fictitious movie remakes. For example, many people said they ‘remembered’ a fake remake of Captain Marvel. Source: PLOS One.

The false memory phenomenon observed in this study is widely researched. It shows how people can construct or reconstruct memories they’re certain are real when they’re not.

Everyone is prone to constructing false memories, and deep fakes appear to trigger this tendency, meaning that viewing certain content can change our perception, even when we consciously know it’s inauthentic.

In both studies, deep fakes had tangible, potentially long-term impacts. The effect might sneak up on us and accumulate over time.

We also have to remember that fake content reaches tens of millions of people, so small changes in perception scale across the global population.

What can we do about deep fakes?

Going to war with deep fakes means combating the human brain.

While the rise of fake news and misinformation has forced people to develop new forms of media literacy in recent years, AI-generated synthetic media will require a new level of adjustment.

We have faced such inflection points before, from photography to CGI, but AI will demand an evolution of our critical senses.

Today, we must go beyond simply trusting our eyes and rely more on analyzing sources and contextual clues.

It’s essential to interrogate the incentives or biases behind the content. Does it align with known facts or contradict them? Is there corroborating evidence from other trustworthy sources?

Another key aspect is establishing legal standards for identifying faked or manipulated media and holding creators accountable.

The US DEFIANCE Act, the UK Online Safety Act, and equivalents worldwide are establishing legal procedures for handling deep fakes. How effective they’ll be remains to be seen.

Strategies for uncovering the truth

Let’s conclude with five strategies for identifying and interrogating potential deep fakes.

While no single strategy is flawless, fostering critical mindsets is the best thing we can do collectively to minimize the impact of AI misinformation.

- Source verification: Examining the credibility and origin of information is a fundamental step. Authentic content often originates from reputable sources with a track record of reliability.

- Technical analysis: Despite their sophistication, deep fakes may exhibit subtle flaws, such as irregular facial expressions or inconsistent lighting. Scrutinize content and consider whether it has been digitally altered.

- Cross-referencing: Verifying information against multiple trusted sources can provide a broader perspective and help confirm the authenticity of content.

- Digital literacy: Understanding the capabilities and limitations of AI technologies is vital to assessing content. Education in digital literacy across schools and the media, including how AI works and its ethical implications, will be crucial.

- Cautious interaction: Interacting with AI-generated misinformation amplifies its effects. Be careful when liking, sharing, or reposting content you’re dubious about.

As deep fakes evolve, so will the techniques required to detect them and mitigate harm. 2024 will be revealing, as around half the world’s population is set to vote in major elections.

Evidence suggests that deep fakes can affect our perception, so it’s far from outlandish to think that AI misinformation could materially affect election outcomes.

As we move forward, ethical AI practices, digital literacy, regulation, and critical engagement will be pivotal in shaping a future where technology amplifies, rather than obscures, the truth.

The post Deep fakes, deep impacts: critical thinking in the AI era appeared first on DailyAI.

This article was originally published at dailyai.com