While COVID-19 forced an emergency shift to online learning at universities, learning to teach efficiently and effectively online using different platforms and tools is a positive addition to education and is here to stay.

To sustain this useful evolution and ensure quality education, universities should concentrate on supporting faculty to embrace and lead the change.

The ethical and strategic use of artificial intelligence at centres of teaching and learning, which support faculty in troubleshooting and innovating their online teaching practices, can assist with this task. Centres of teaching and learning are responsible for educational technology support, teaching and learning support, and instructional design.

Expansive move to online education

Research conducted at 19 centres of teaching and learning and their equivalents from Canada, the United States, Lebanon, the United Kingdom and France published in August 2020 showed that staff in these centres deployed all available resources to support the rapid switch to online education.

Staff worked 10- to 14-hour days during the first phase of the pandemic to meet the rise in faculty and staff needs. These centres also reported difficulty recruiting and training qualified candidates.

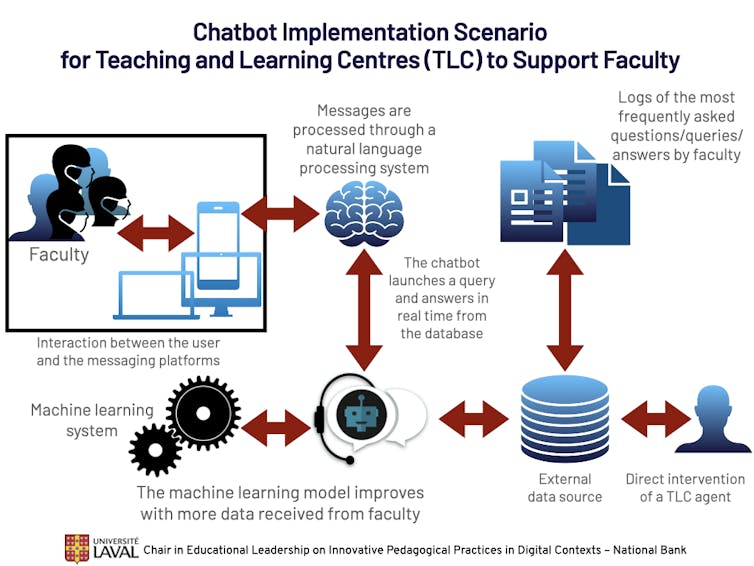

Used strategically, chatbots could take over the repetitive low-level guidance tasks that teaching and learning centres field and help avoid overload. A chatbot, also called a conversational or virtual agent, is a software or computer system designed to communicate with humans using natural language processing.

This communication may be via text messages or voice commands.

Why a chatbot?

Chatbots offer a viable, win-win solution to teaching and learning centres and to faculty. They are available 24/7, can reply to hundreds of simultaneous requests and provide fast and robust service support when needed.

Using chatbots could free teams for complex inquiries that require human intervention, such as transforming teaching approaches and collaborating to innovate solutions to problems such as improving equity and access in online teaching.

A collaboration between the centres’ experts and technology could provide better services and support for faculty to enhance the learning experiences they create for students. Chatbots can guide faculty towards appropriate and effective resources and professional development activities, such as how-to articles, tutorials and upcoming workshops. These can be tailored to suit faculty members’ individual needs, their varied digital skill levels and their backgrounds in designing hybrid learning experiences.

Chatbot systems are already used in educational institutions for teaching and learning, to perform administrative tasks, to advise students and to assist them in research.

How would it work?

Two options are possible in terms of chatbots’ AI conversational ability:

- Artificial Intelligence Markup Language methodology: Programmers give the AI a library of questions, answers and keyword associations through a database. From there, the chatbot can provide appropriate answers within a strictly defined frame.

- The Natural Language Processing approach: This allows for more flexibility. Once programmers build an initial dataset, the AI-powered tool learns from ongoing exchanges to find the best combination of answers to recurring questions asked by faculty members. The AI is then able to detect keywords in a sentence and understand the context of a question.

Programmers are expected to add data from conversations to a dataset that keeps growing over time. When asked a question, the chatbot responds based on its current knowledge database. If the conversation introduces a concept that it isn’t programmed to understand, the chatbot can state that it doesn’t understand the question, or pass the conversation to a human operator.

Either way, the chatbot will also learn from this interaction as well as from future interactions. Thus, the chatbot will gradually grow in scope and gain relevance.
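As a rough illustration of the first option and of the hand-off to a human described above, here is a minimal Python sketch. The knowledge base entries, the `reply` function and the `unanswered_log` list are hypothetical names invented for this example; a real deployment would rely on an AIML interpreter or an NLP service rather than this simple keyword lookup.

```python
import re

# Hypothetical knowledge base: each set of keywords maps to a canned answer.
KNOWLEDGE_BASE = {
    frozenset({"record", "lecture"}): "See the how-to article on recording lectures.",
    frozenset({"create", "quiz"}): "See the tutorial on building online quizzes.",
}

# Questions the bot could not answer, kept so programmers can extend the dataset.
unanswered_log = []


def tokenize(message):
    """Lowercase the message and return its words as a set."""
    return set(re.findall(r"[a-z]+", message.lower()))


def reply(message):
    """Return a canned answer, or hand over to a human when nothing matches."""
    words = tokenize(message)
    for keywords, answer in KNOWLEDGE_BASE.items():
        if keywords <= words:  # every keyword appears in the message
            return answer
    unanswered_log.append(message)
    return "I don't understand the question. Passing you to a staff member."
```

Calling `reply("How do I record a lecture?")` returns the recording article, while an unrelated question such as `reply("Can I book a room?")` triggers the hand-off message and is appended to `unanswered_log` for later review.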

(Nadia Naffi)

For a chatbot’s reliability and trustworthiness to grow, it must be effective in helping these centres support their faculty. Implementing and fine-tuning chatbots so that they are ready for use is essential, even if it requires an investment of time and resources.

Ethical framework for AI in education

The Institute for Ethical AI in Education, based at the University of Buckingham in the United Kingdom, and funded by McGraw Hill, Microsoft Corporation, Nord Anglia Education and Pearson PLC, released The Ethical Framework for AI in Education in 2020. The framework argues AI systems should increase the capacity of organizations and the autonomy of learners while respecting human relationships and ensuring human control.

Chatbots in university settings should be ethical by design, meaning that they should be designed to be sensitive to values such as security, safety, accountability and transparency. If they are used in centres of teaching and learning, users should be protected against all types of harm or abuse. Users also need to feel treated fairly and should always be given the option to reach a human. Faculty members must know they’re interacting with an AI.

Chatbots can and should be accessible. Tolerating user errors and input variation, being designed for diverse abilities and allowing multilingual text communication are examples of facilitating accessibility.
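One way to tolerate typing errors is approximate string matching. The sketch below uses `difflib.get_close_matches` from the Python standard library; the vocabulary list and the `normalize_word` helper are assumptions made for this illustration, not part of any particular chatbot product.

```python
import difflib

# Hypothetical vocabulary of terms the chatbot knows how to handle.
VOCABULARY = ["tutorial", "workshop", "quiz"]


def normalize_word(word):
    """Map a possibly misspelled word to the closest known term, if any."""
    matches = difflib.get_close_matches(word.lower(), VOCABULARY, n=1, cutoff=0.6)
    # Keep the original word when nothing in the vocabulary is close enough.
    return matches[0] if matches else word
```

With this helper, a misspelling such as "tutorail" is mapped to "tutorial" before the chatbot looks for an answer, while a word that resembles nothing in the vocabulary is left unchanged.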

Do no harm: privacy

Chatbots should be designed to “do no harm,” as per UNESCO’s recent recommendations on the ethics of artificial intelligence. When talking about non-maleficence, privacy should be addressed.

AI-powered tools come with data recording issues. Strong safeguards in data collection and storage are needed. Following the European approach to data protection, centres should minimize data collection. Only required information should be stored, such as specific parts of conversations, but not the interlocutors’ identity.

A transparency-based approach allows users to decide which personal data may be shared with the centres. This would help keep trust in the tool and its usefulness high. In case of malfunction, faculty members would provide feedback on the issue, and centres would fix it.

Centres should consider anonymization of users, strong encryption of all data stored, and in-house storage when possible, or storage by a trusted contracted third party that follows similar data privacy rules.
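The idea of storing parts of a conversation without the interlocutor's identity could look roughly like the Python sketch below. The `StoredExchange` record, the `minimize` function and the simple e-mail pattern are hypothetical and deliberately basic; encryption and storage are out of scope here, and a real system would need a far more thorough treatment of personal data.

```python
import re
from dataclasses import dataclass

# Simple pattern for e-mail addresses typed into a message (an approximation).
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class StoredExchange:
    """What the centre keeps: a topic and a redacted excerpt, no identity."""
    topic: str
    excerpt: str


def minimize(user_name, user_email, message, topic):
    """Drop the identity fields and redact e-mail addresses in the message."""
    excerpt = EMAIL_PATTERN.sub("[email removed]", message)
    # user_name and user_email are intentionally never stored.
    return StoredExchange(topic=topic, excerpt=excerpt)
```

The record that reaches storage contains only the topic and the redacted excerpt, so later analysis of recurring questions does not depend on knowing who asked them.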


Addressing bias, environmental impact

Possible bias in the initial database must be addressed. Whether it pertains to gender, ethnicity, language or other variables, the initial dataset must be cleaned and carefully analyzed before being used to train the AI, whether AI markup language or NLP methods are deployed. If the latter is applied, ongoing monitoring should be considered.

A green data storage solution should also be considered to reduce the CO2 cost of this activity on the environment. For example, universities might investigate whether water cooling systems could be used in server rooms instead of air conditioning.

The university of the future, as anticipated by many scholars and policy makers, has already begun. Technology, if used ethically and strategically, can support faculty in their mission to prepare their students for the needs of our society and the future of work.

This article was originally published at theconversation.com